Nvidia's Failed Attempt to Patent AI-Driven Hints | 06/08/25

Plus Google announces Genie 3, and the Mario Kart AI got nerfed!

The AI and Games Newsletter brings concise and informative discussion on artificial intelligence for video games each and every week, plus a summary of all of our content released across various channels, like our YouTube videos and in-person events like the AI and Games Conference.

You can subscribe to and support AI and Games, with weekly editions appearing in your inbox. If you'd like to work with us on your own games projects, please check out our consulting services. To sponsor, please visit the dedicated sponsorship page.

Happy Wednesday everyone, Tommy Thompson here and it’s time for another issue of the AI and Games newsletter. This week there’s a bunch of stuff in the news, and we’re going to dwell on one that slipped past (most) news outlets in recent weeks, on how Nvidia submitted a patent back in 2021 that was only just rejected by the UK’s patent office.

So what can we expect this week?

Some updates on my movements to Gamescom and PGC Helsinki

Microsoft’s revenues go up, but where is the AI money?

Mario Kart World’s AI got a nerf.

Google announced the Genie 3 world model.

And more!

Let’s a go!

Follow AI and Games on: BlueSky | YouTube | LinkedIn | TikTok

Announcements

A handful of updates for everyone!

Reviewing Submissions for AI and Games Conference 2025

I just wanted to take a quick moment to thank everyone for their contributions to the open call for submissions to this year’s AI and Games Conference. The number of submissions (up 30% on last year) combined with the overall quality means we have a very difficult couple of weeks ahead. More info as it happens, but I’m very excited about what you can expect to see in the programme!

Let’s Meet at Gamescom

Gamescom is only two weeks away and I still have a mountain of work between then and now. But, I’ve kept some hours free between Tuesday and Thursday for appointments. If you’re interested in working with AI and Games be it on our consultancy and training side, or to be involved in our content creation side of things, then get in touch.

AI and Games @ Pocket Gamer Connect Helsinki

Another week, another speaking engagement announced, with Devcom less than two weeks away, IEEE CoG the week after, and then Nexus Dublin in early October. I’ll be swinging home briefly after Nexus to then fly out to Helsinki to attend the AI GameChangers summit at Pocket Gamer Connects Helsinki on October 7th and 8th. This talk will be similar in content to the one I’m giving at Devcom in just a couple of weeks’ time.

Note to self: finish writing the Devcom talk…

PGC Helsinki is currently my final talk in the schedule for 2025. I’ll be busy the rest of October putting together our very own AI and Games Conference 2025, and frankly my life isn’t planned past Wednesday November 5th at this point (i.e. the day after the conference ends).

AI (and Games) in the News

Some headlines from the past week worth checking out.

Microsoft Revenues Are Up, But… Where’s the AI Money?

Microsoft posted its results for the end of its 2025 fiscal year, and as reported over on The Verge, the Xbox holder has managed to balance the books quite successfully, with a total of $76.4 billion in revenue in the quarter ending June 30th, bringing it up to a total of $281.7 billion for the full year (up 15% on 2024). This is of course coming right after the layoffs of over 9,000 employees in early July, which have no doubt helped to balance the numbers somewhere down the line as they will reduce operating costs.

The only reason I dig this up is because companies like Microsoft are always keen to hide specifics. When you dig into the numbers, sales of products such as the Microsoft Surface laptops and even things like Xbox consoles and Game Pass subscriber counts are never revealed directly. Instead, it’s reported how well they’re performing monetarily. For example, we know that ‘Xbox content and services’ are up 12%, without getting into specifics of where that increased performance is coming from. And so it raises the question, where is the AI?

AI performance is no doubt baked into the likes of ‘Azure and other cloud services’ (up 39% year on year). Azure is of course Microsoft’s cloud computing platform, and allows for you to build a variety of online tools and services - which is where Microsoft’s AI services are provided. But I always find it amusing that companies like Microsoft make sure that the AI side of this is never made explicit.

Why? Because Azure is a lot more than just AI, and I suspect that the growth Microsoft is hoping to attain is not yet as significant as they’d hope for it to be. And that assumption is made largely on the basis that if they were making money hand over fist courtesy of AI, then they’d be telling us as loudly and as clearly as possible.

Voice Actors Speak Out Against Generative AI

Not one but two different voice actors had something to say about the state of all things generative AI.

“It’s synthetic. It’s not real.”

A story that received a lot of attention was the comments made by voice actor Neil Newbon, largely known for his work as Astarion in Baldur’s Gate 3, on the viability and applicability of AI for voice acting and performance.

He had the following to say in his interview with the Radio Times Gaming publication released last week:

"You know, I spoke to a lot of devs, I know a lot of devs, and they don't want to use AI because it's shit. It doesn't work very well. It takes a lot of effort to get something that's half decent. Even with the modern things that I've seen recently, it's like, 'Yeah, okay, great. You can make AI, and what?' Where's the joy in it? I’m not interested in that. You can always tell that something's slightly off. It's not right. And even if it comes to a point you can’t tell the difference, so what? Y’know? Why not make it with people? Why not have more fun?"

You can hear Neil’s full comments at around the 25 minute mark of the full interview on the Radio Times Gaming podcast.

“No. Do Not Fucking Do That.”

Short but succinct, the headline is from a quote tweet by Elias Toufexis, largely known among gamers as the voice of Adam Jensen in the modern(ish) Deus Ex titles.

The comment is in relation to an AI-powered mod from 2023 that clones Toufexis’ voice to replace the original recording of Gavin Drea for V, the protagonist of Cyberpunk 2077. While Toufexis does not belabour the point, he does highlight, when questioned why, that this is using his voice without permission - a recurring problem where data is so readily available that people think they can do so without consequence.

If you’re desperate to hear Elias do his thing, just go play Deus Ex - in fact I’ve still not played Mankind Divided!

The Rock Offered to Deep Fake Himself to Save Money (Rather Than Take a Paycut)

As reported over in the (paywalled) Wall Street Journal, Dwayne ‘I only bought the rights to the Rock in 2024’ Johnson offered to have a deep fake version of his face created during development of the upcoming live action adaptation of Disney’s Moana. The idea being to superimpose the AI-generated version of him onto his stunt double Tanoai Reed - who also happens to be his cousin. The conversation even went so far as having negotiations with AI company Metaphysic - whose work has been used in the likes of Alien: Romulus for spoilery reasons I won’t get into here.

Disney decided to not go this route, with the primary concerns being legal issues - whether they would own the rights to the assets generated - as well as public backlash both from consumers and unions.

The funny thing is the argument was to use this as a means to cut costs. Surely the easiest thing is for the man who was the highest paid male actor in Hollywood in 2024 to just… take a pay cut.

Google Announces version 3 of their Genie World Model

The big AI story of the week is that just yesterday Google announced Genie 3, the latest in their efforts to build general purpose ‘world models’ that can simulate interactive environments.

This is similar to the work by Microsoft on the ‘Muse’ project I discussed back in March, in which they’ve trained an AI system that learns how to simulate a 3D environment based on a prompt. It’s also an expansion on the work Google themselves have done elsewhere, including not just the first versions of Genie but also the GameNGen project I discussed in a case study issue of AI and Games earlier in the year.

The updated version of Genie sports a number of innovations, including it being a more general purpose interactive-video style simulation, complete with a higher resolution (going from 360p to 720p), plus the ability to run simulations that last multiple minutes. You can see some of it in action via the link below, and it is very impressive to watch.

While impressive - notably when it simulates real-world rather than stylised environments - it still has a number of limitations. Critically the interaction/action space of the model is quite limited, and like most image generation systems, it cannot consistently generate readable text. But of course the larger issues are that it lacks any real practical means for it to be a game engine - despite Google’s insistence it can be. This was a point we raised in the case study episode linked above.

Down the line I’d perhaps revisit this in an issue of the Digest, but considering all they’ve published is a web page on the DeepMind blog, which lacks any meaningful insight, I’m putting it to the back of the pile for now.

Mario Kart World’s NPC AI Finally Gets a Nerf

Not as big a deal perhaps as some of the other stories of late, but the fact that Nintendo decided to tone down the enemy racer AI in the recent 1.2.0 patch for Mario Kart World is welcome! As detailed in the official patch notes, the devs have “made COM weaker in everything other than ‘Battle’”.

What does that mean? Well it could mean many things, but for me the big improvement concerns ‘rubber banding’, which makes racing against AI opponents at higher difficulties such as 150cc really difficult. The Mario Kart series is notorious for how its AI racers are able to spring back into contention despite the significant lead you achieve (they ‘snap back’ to your location, akin to a rubber band). I noticed this on higher difficulties in Grand Prix and Knockout Tour, and I’m tempted to go and tick off those 3-star 1st place finishes that I’m missing.
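For anyone who hasn’t seen rubber banding written down, here is a minimal sketch of the general idea in Python. To be clear, this is not Nintendo’s implementation: the function name, the `strength`, `max_boost` and `min_factor` parameters and all of the numbers are assumptions made purely for illustration.

```python
def rubber_band_speed(base_speed, gap_to_player,
                      strength=0.02, max_boost=1.25, min_factor=0.9):
    """Return an AI racer's speed after a rubber-band adjustment.

    gap_to_player is how far the AI is behind the player (positive)
    or ahead of the player (negative), in arbitrary track units.
    All parameters are hypothetical tuning values.
    """
    # The further behind the AI is, the bigger the speed multiplier.
    factor = 1.0 + strength * gap_to_player
    # Clamp so the AI never gets absurdly fast or slow.
    factor = max(min_factor, min(max_boost, factor))
    return base_speed * factor


# An AI racer far behind the player gets the full (capped) boost...
far_behind = rubber_band_speed(100.0, gap_to_player=50.0)
# ...while one far ahead is slowed down so the player can catch up.
far_ahead = rubber_band_speed(100.0, gap_to_player=-20.0)
```

In a scheme like this, ‘making COM weaker’ could be as simple as lowering `max_boost` or `strength`, though the patch notes don’t say what was actually changed.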

Nvidia’s Failed Attempt to Patent AI-Driven Tip Engine

Alrighty, let’s dig into the big story of the week, that came courtesy of a release by the UK’s intellectual property office (IPO) at the beginning of July - with thanks to law firm Lewis Silkin whose comments on it caught my attention (hey, we’re a law newsletter after all, he says only half joking). GPU-manufacturer-cum-AI-company Nvidia filed a patent with the IPO back in 2021 (but dated back to 2020) about the idea of “recommendation generation using one or more neural networks”.

Now as the title implies, the application has been rejected by the UK IPO, and I think that’s perhaps one of the more interesting parts of this. So let’s break it all down and discuss:

What did Nvidia submit back in 2021?

What is the broader application of it?

How does this compare to the current market - i.e. is it viable?

Why did the IPO reject it?

Digging into Application GB2108909.9

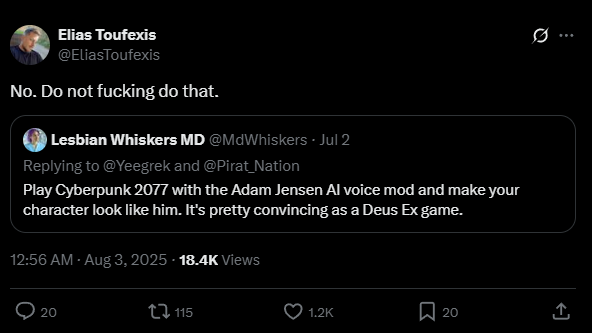

Ok so, Nvidia’s patent submission back in 2021 sought to claim ownership of a technology where the idea is to create a system that uses AI to present recommendations to the end user. The abstract of the patent is presented below:

A processor that comprises one or more circuits that use at least one neural network to generate recommendations for players based in least in part on cumulative changes of state in the game. The processor receives input data and transforms the data into a plurality of feature vectors corresponding to common schema. The input data may be in the form of a game event data, gameplay data, statistical data, chat data, biometric data or player skill data. The plurality of feature vectors may be encoded into a latent space representing a time window of gameplay representative of the changes of state. The neural networks include a generative adversarial network to accept the latent space to generate the recommendation. Also disclosed a system, a method, a machine-readable medium having a set of instructions stored upon it and a player coaching system for providing recommendations to players.

Let’s dig into what that actually means for anyone who needs a little bit of help reading AI-gibberish:

The patent relates to a piece of hardware (a processor), which would presumably be part of Nvidia’s own products. This is similar to the dedicated AI hardware (Tensor Cores) that runs AI-upscaling technology like DLSS on contemporary GPUs. So I would imagine this is AI software being run on dedicated AI hardware on an Nvidia GPU.

This AI processor would have loaded onto it a trained neural network - which is the technology that powers most contemporary AI innovations in deep learning and generative AI - that would be responsible for generating recommendations to players in real time.

The neural network is responding to input data from the game, as well as other information relevant to the game that could be utilised to some degree. What follows are some examples based on the terms used above. These are my own illustrations, and not indicative of what Nvidia sought to do:

Voice chat to give contextual recommendations relevant to a conversation between players.

Biometric data ranging from observing your face on a camera, hearing the tone of your voice, or even tracking motions on controllers. It could even go so far as to measure the duration with which you’ve been active in front of the device (so it could encourage you to take a break).

Game event data such as recent interactions, and your success or failure at achieving specific tasks. But they also mention ‘cumulative changes of state in the game’, so this could mean observing changes over time (e.g. succeeding or failing at specific tasks).

Statistical data that is indicative of either your success in specific contexts, or that of the broader player base.

The neural network is trained such that it interprets the relationships between input types and their values. This establishes the feature vectors of the model, and then from this it builds an even more complex relationship of the values of these inputs over time, which is the latent space.

For example, the AI could observe that the decline of health over a fixed period of time is related to the appearance of an enemy NPC and it subsequently attacking the player.

The idea of a time window being used to represent changes in state implies that they are observing what is happening in the game contextually, such that the hints could have some value.

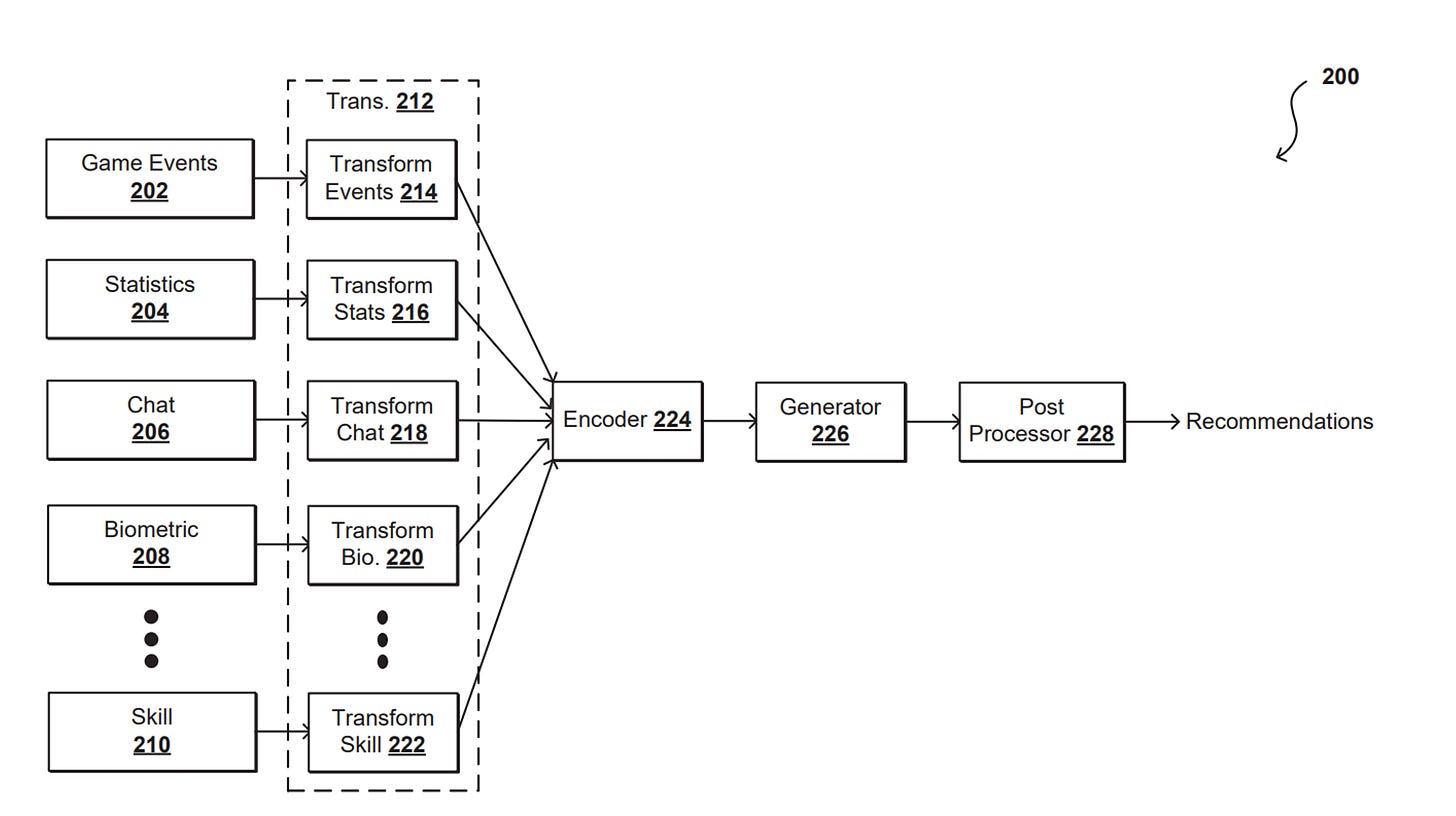

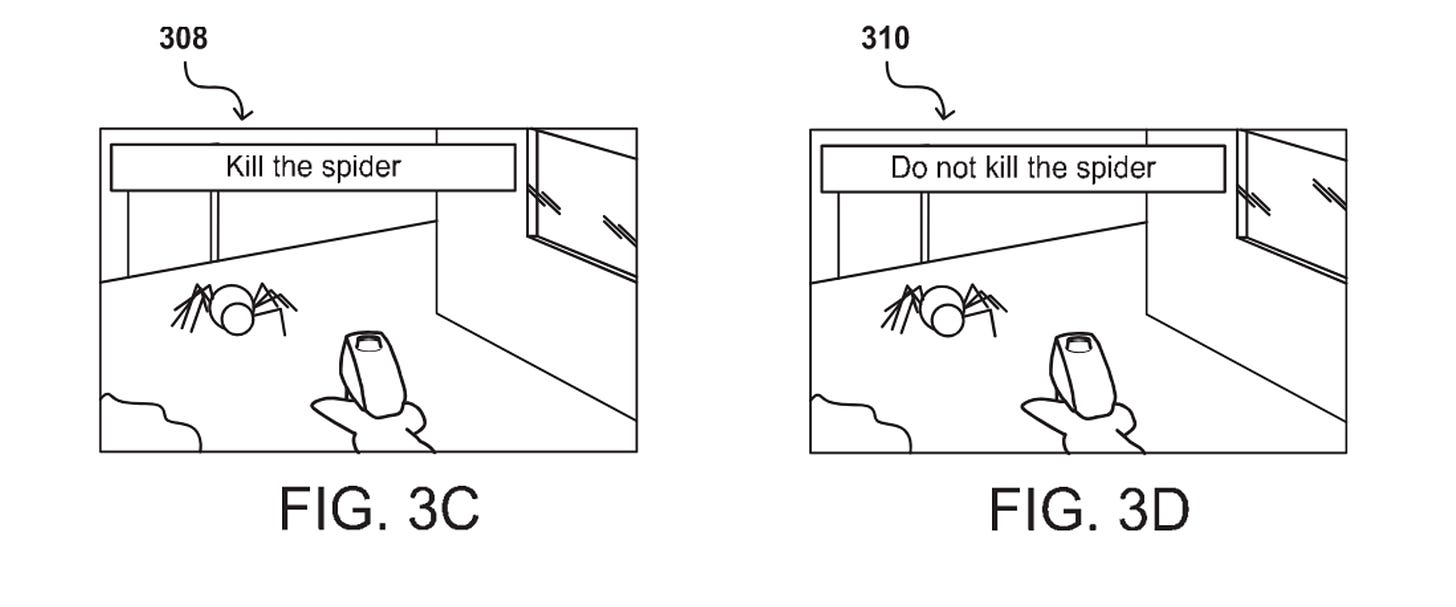

The system would use a specific type of neural network, known as a Generative Adversarial Network (GAN) to generate the recommendations, but the decision of when to generate and provide those tips would come from a separate system.
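To make the ‘feature vectors in a common schema’ and ‘time window’ ideas a little more concrete, here is a small Python sketch. Everything in it is an assumption on my part: the `SCHEMA` fields, the `TimeWindow` class and the `recommend` rule are hypothetical, and the simple sum over the window is only a stand-in for the learned latent space and GAN described in the patent, not a reconstruction of Nvidia’s system.

```python
from collections import deque

# Hypothetical common schema: every event is mapped onto these fields.
SCHEMA = ("health_delta", "enemy_visible", "task_failed")


def to_feature_vector(event):
    """Map a raw event dict onto the fixed schema, filling gaps with 0.0."""
    return tuple(float(event.get(name, 0.0)) for name in SCHEMA)


class TimeWindow:
    """Keeps the most recent `size` feature vectors of gameplay."""

    def __init__(self, size):
        self.frames = deque(maxlen=size)

    def push(self, event):
        self.frames.append(to_feature_vector(event))

    def summary(self):
        """Cumulative change per feature over the window.

        A real system would use a learned encoder here; summing is
        just the simplest possible stand-in.
        """
        if not self.frames:
            return tuple(0.0 for _ in SCHEMA)
        return tuple(sum(column) for column in zip(*self.frames))


def recommend(summary):
    """Toy rule standing in for the generative model (an assumption)."""
    health_lost, enemy_seen, _task_failed = summary
    if health_lost <= -30 and enemy_seen > 0:
        return "Take cover: an enemy is draining your health."
    return None


window = TimeWindow(size=3)
for _ in range(3):
    window.push({"health_delta": -10, "enemy_visible": 1})
tip = recommend(window.summary())
```

The point of the window is the same as in the health example above: no single event says much on its own, but three frames of falling health with an enemy on screen is enough context for a hint to be worth showing.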

In the actual submission, Nvidia included several images detailing both the system itself, as well as examples of how this could be applied in practice. As we can see above, the idea is for it to collate all of the relevant information in the current state of the game such that the end user receives a meaningful recommendation.

Put to Practice

So the funny thing about this patent application, is that in the time since its original conception and submission to the IPO, multiple companies - including Nvidia - have subsequently gone off and implemented systems of this style.

In the past year Nvidia has been making noise about Project G-Assist: a technology that runs on GeForce RTX GPUs that is designed to provide technical support for the use of Nvidia GPUs on specific titles. This makes sense as a first step for this type of technology, given it is making recommendations based on the performance of your PC relative to internal benchmarking and testing that Nvidia will have done against specific titles - either on their own or in collaboration with games studios. So in this case it can pull in performance data of the game and then make recommendations on how to optimise performance, and even do some of it for you.

Now this is still rolling out to some degree, and just last week in the newsletter I pointed to the article by James Bentley on PC Gamer, who had a lot of difficulty getting it to be of any use. I suspect that’s because it’s hard to successfully encapsulate every possible hardware configuration such that it can operate seamlessly.

G-Assist is but the first step towards making something like this patent operate as a commercial product. One of the issues with this, which I even raised in some interviews in the press when it was announced in 2024, is that you need to build a corpus of relevant information for each game that it supports. You can’t just expect an AI to know how to play Cyberpunk 2077 if it has never been fed any information about the game. Though at this point that could be more readily attainable: given Cyberpunk 2077 has been on the market since 2020, you could train a model by scraping up all sorts of wikis, guides, and everything else in between.

This problem is exacerbated when you then have new titles, given this model would need to be trained sufficiently to understand the context and nuance of that particular game. Of course the preference is to try and generalise these systems so they can operate in any and all games, like DLSS, which started out using bespoke trained models for each game and has since moved to a more generalised upscaling system given they have sufficient data to achieve it. However, from my perspective it would be difficult to do this for context-dependent in-game events and corresponding tips, given the aforementioned feature vectors and latent spaces are going to be unique for different games. Sure, there is going to be significant overlap in specific genres, but the nuances of each game may make it difficult for this to operate on a moment-to-moment, case-by-case basis. The gameplay of titles such as Call of Duty and Battlefield is markedly similar if you squint your eyes a little, but they’re still distinct games in their own right.

This latter issue is no doubt why G-Assist has started on the most vanilla version of the system whereby it acts as technical support. But it is something that could be overcome in time once the process and data are available - and I suspect that Nvidia would have to work more closely with studios during development to make all of that happen.

Meanwhile, it is worth briefly mentioning that Nvidia are not the only company exploring this idea. As discussed a couple of times in the newsletter, most recently in March, Microsoft announced ‘Copilot for Gaming’, where the idea is for it to be exactly what is described by the patent: an in-game assistant that is observing what is happening in the current game state and reacting to it.

Why Was it Rejected?

So the big question is, why was the patent rejected? Well, it all comes down to how patents work in the context of games. For a little bit of context, per the Patents Act 1977 in the UK, a patentable invention is defined in the very first instance (like literally the first thing in the regulation) as follows (emphasis mine):

1.-(1) A patent may be granted only for an invention in respect of which the following conditions are satisfied, that is to say —

(a) the invention is new;

(b) it involves an inventive step;

(c) it is capable of industrial application;

(d) the grant of a patent for it is not excluded by subsections (2) and (3) or section 4A below; and references in this Act to a patentable invention shall be construed accordingly

The key argument that Nvidia had made was that the recommendation system involved an “inventive step”, and so satisfies section 1(1)(b) above. The argument that needs to be presented for any patent to satisfy the inventive step is that it achieves something whose relevance any person who is skilled in the state of the art of the field (PSA) would be able to determine and understand. The PSA in this case would be a game designer, who perhaps also relies on a machine learning engineer given they would often not understand the intricacies of the models being built and the underlying science of it all. This was largely accepted and the IPO were happy with it.

However, the part that brought it down was the next step of defining a patentable invention, in section 1(2) below (emphasis mine):

1.-(2) It is hereby declared that the following (among other things) are not inventions for the purposes of this Act, that is to say, anything which consists of -

(a) a discovery, scientific theory or mathematical method;

(b) a literary, dramatic, musical or artistic work or any other aesthetic creation whatsoever;

(c) a scheme, rule or method for performing a mental act, playing a game or doing business, or a program for a computer;

(d) the presentation of information

In this instance, the patent failed under section 1(2)(c), in that it was considered a method of playing a game. Nvidia appealed against this, arguing that the technical work of creating the latent space is the real innovation, and not necessarily the end product of its application in various games. Being technical in nature, they argued, meant it had more in common with game mechanics, and that use of the tool could be construed as a feature of a game mechanic with a unique method of implementation. This could allow them to point to patents obtained by other game companies in previous years (notably Konami and Nintendo) as a basis for their claim.

It’s worth pointing out that patents for games are actually quite rare, given you cannot patent a game mechanic. Rather you patent the implementation of a mechanic or process. It speaks not just to how rare that version of the mechanic is (which is difficult in an industry where everything is derived from something else), but also conceptualising the uniqueness of the method (not the code, but the process) behind building the mechanic.

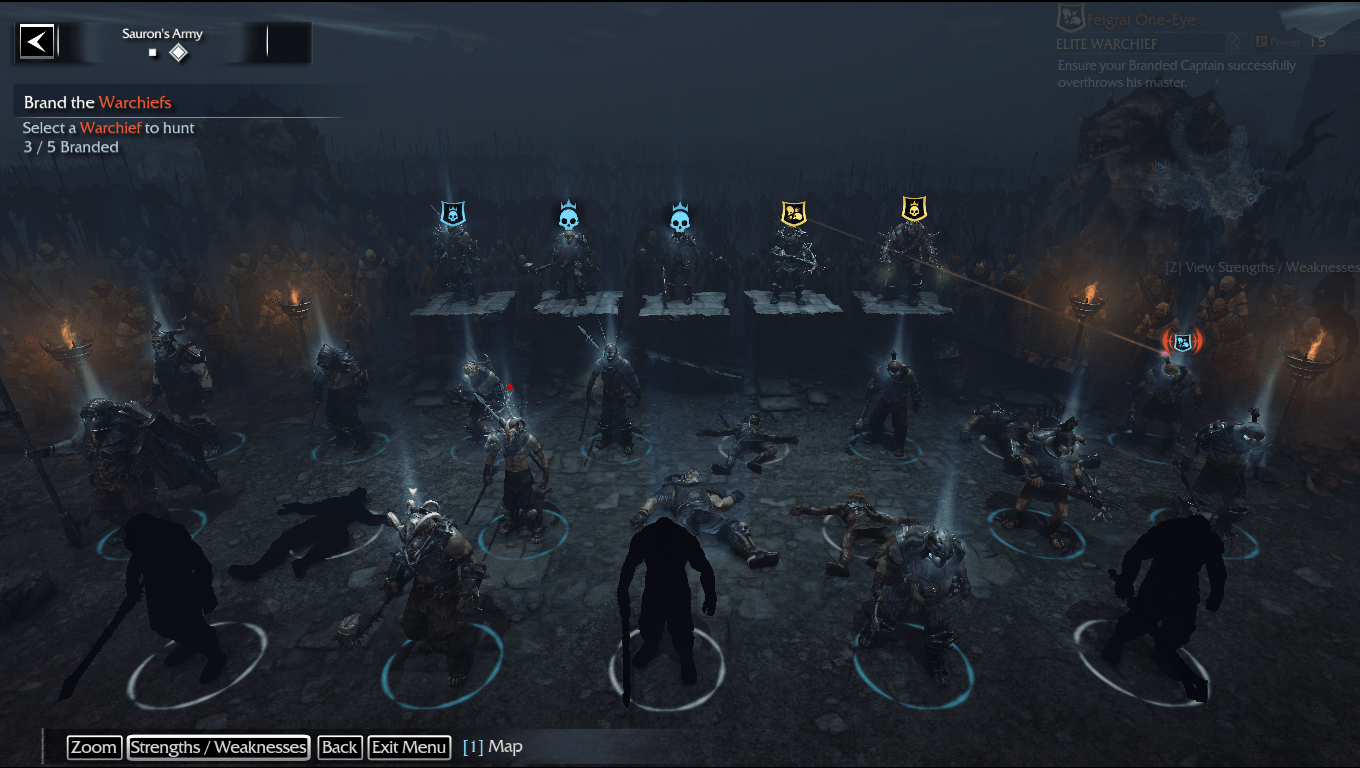

It’s why most games companies tend to have patents on hardware, rather than software, given it’s much harder to define a game mechanic method as unique compared to others, whereas hardware is much easier to define as unique or distinct from other implementations. Patents on game mechanics or systems, like the Nemesis system patented by WB Games after its use in Middle-Earth: Shadow of Mordor, only work because they cover the specific way in which the mechanic is implemented to achieve a desired effect.

The IPO didn’t feel that what was presented was sufficient to avoid falling foul of section 1(2)(c) of the act, and so while it was considered inventive, the claim was considered too broad for it to be distinguishable as a unique, patentable implementation. For a deeper dive into this process - critically with a lot more legal understanding than me - I once again refer to Aaron Trebble’s article over at the Lewis Silkin website.

Wrapping Up

Ooft, I swear we really need to start sending me to law school. So much of my work is bashing into the law these days - but in a good way, of course!

Thanks for reading, I’m going to crack on with Expedition 33 over my lunch break, but I think we’ll keep the hints turned off. I like to game with a sense of mystery!