"Reputational Risks" Make Headlines | 11/06/25

Plus SAG-AFTRA, the BFI, and join our meet-up in Malmö, Sweden!

The AI and Games Newsletter brings concise and informative discussion on artificial intelligence for video games each and every week. It also summarises all of our content released across various channels, such as our YouTube videos and in-person events like the AI and Games Conference.

You can subscribe to and support AI and Games on Substack, with weekly editions appearing in your inbox. The newsletter is also shared with our audience on LinkedIn. If you’d like to sponsor this newsletter, and get your name in front of our audience of over 6000 readers, please visit our sponsorship enquiries page.

Hello all, and welcome to this week’s edition of the AI and Games newsletter. I’m back from my conference travels - and even a visit to London Tech Week on Monday - and after a busy week announcing the return of the AI and Games Conference this November, I figured it was time to catch up with all of the big AI-related news stories that hit the industry in the past couple of weeks.

Follow AI and Games on: BlueSky | YouTube | LinkedIn | TikTok

Announcements

Alrighty, we have a bunch of information for you to catch up on this week.

AI and Games Conference 2025 Update

A big thank you to everyone for the kind words and overall enthusiasm for our announcement of the 2025 AI and Games Conference. As a reminder of some critical bits of information on that front:

We’re running a two-day event on 3rd and 4th November 2025.

Early bird tickets are on sale now, with discounts for students and indie developers.

Tickets are also selling pretty well: around half of our indie early bird allocation is now gone!

Our call for submissions is live until the end of July.

We’re keen to hear from game developers, researchers, and everyone in between. Be sure to check out the submission form for more information.

We’re also still open to sponsorship for the event, with spaces in our inventory to engage with our audience directly. Feel free to contact me directly if you’re interested.

Quick Announcements

We’ve got not one, not two, but three event announcements to make. Let’s a go!

As mentioned last week, I’ll be attending the Artificial Intelligence and Games Summer School for my third year in a row later this month. This year it has moved to the slightly cooler climate of Malmö in Sweden. Tickets are still live, and I’ll be hosting a panel on the Wednesday (I think). Looking forward to being there. I’ll be around from the 23rd to the 26th.

Plus, we will be hosting an AI and Games meetup while I’m in Malmö from 6pm on Tuesday June 24th over at the Malmö Brewing Co & Taproom. Regardless of whether you’re attending the summer school, we’d love to hang out with the local games industry that evening and enjoy a cold one (alcoholic or otherwise).

While this is just an informal meet-up, we’re asking people to register at this link so we can get an idea of numbers. That helps us to book some space at the venue without catching the staff off guard, or having us scrambling for an alternative venue.

I am not presenting at Develop 2025 in Brighton next month, but I am there the whole three days. I am currently working out some stuff for my schedule (we have… ideas), but certainly up for a meeting and chat if you’re around!

If you’re attending Develop and want to arrange a meeting on how AI and Games can work for you - or how your business can support the work we do - be sure to get in touch via LinkedIn or the button above!

Finally, I’m pleased to announce I’ll be speaking at Devcom 2025 in August, which is held right before Gamescom - one of the biggest events in the gaming calendar. I’ll be talking about the current state of AI in the games industry - what a shocker, who would’ve thunk it? - and I will be in Cologne for much of the week for anyone keen to meet up. More news on that as it happens.

/dev/games 2025 Post-Mortem

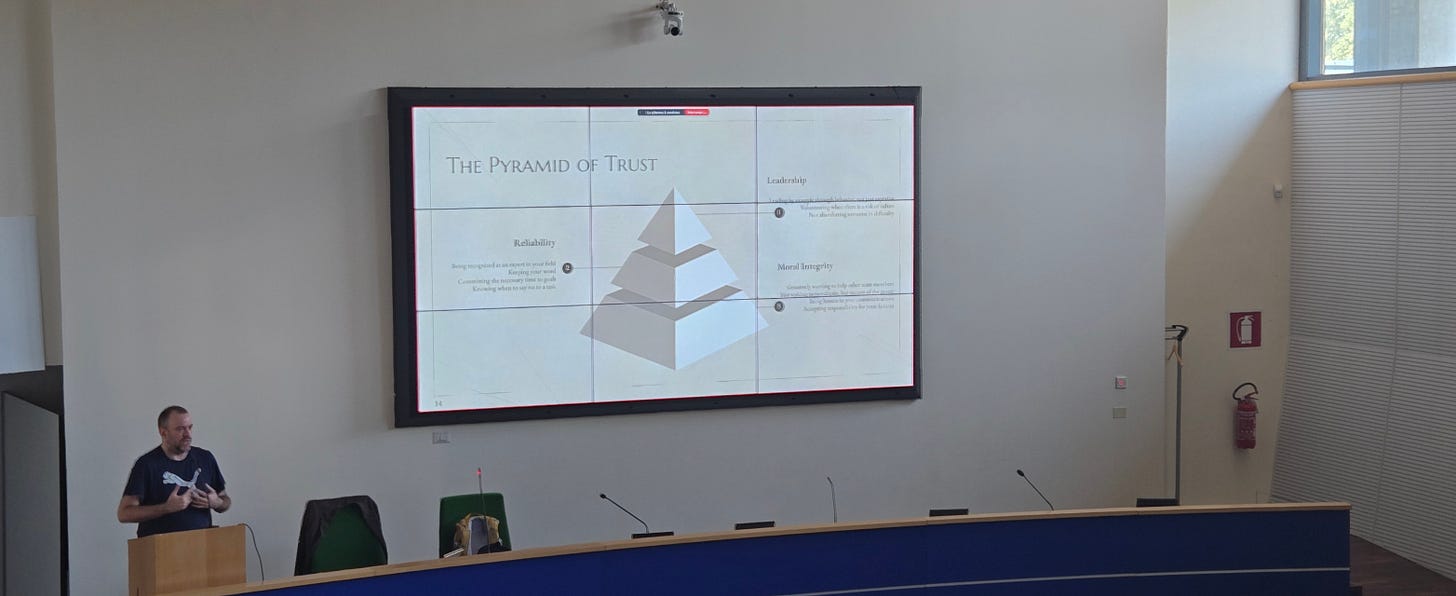

I had a fantastic two days at /dev/games 2025 in Rome last week. I’ve been mentioning that I was going to be at this event for a few weeks now, and it did not disappoint.

Critically, this event, like the AI and Games Conference, is still in its infancy and only just ran its second iteration. The team is still finding their feet, but they put together a great programme of talks highlighting the learnings and expertise of the Italian games industry, as well as the amazing work being done both in its own indie scene and in AAA projects and studios.

This is still a relatively unknown event outside of Italy right now, which is a shame given I was really impressed by the enthusiasm and energy of everyone in attendance. I was one of only two speakers from the UK, and everyone was incredibly welcoming and enthusiastic to chat with us. I really feel like this is an event that will flourish in the years to come. So I’m using my platform to get some eyeballs on this, and to encourage other devs - particularly in the UK - to think about supporting these kinds of community engagements.

One thing that has become readily apparent to me this past year, as I’ve attended everything from Nexus in Dublin to Konsoll in Bergen and GDS Prague, is that the UK industry - despite its many challenges - is healthier than those of many of our European friends. We have a lot of expertise and insight we can bring, not just to support these communities, but to find ways for us to work together.

A big thank you to Andrea Tucci, Franco Milicchio and the whole organisational team who took great care of me. Plus to all the other speakers and attendees who I had some great conversations with - some of which ran long into the night. I hope to be back next year, and maybe drag some more UK folks with me - George E. Osborn, fancy a trip to Rome next June?

All the News, in Brief

Of course, we had some relatively quiet weeks in the games industry in the build-up to my vacation, allowing me to spend more time focussing on stories such as the UK copyright debate. But of course, as soon as I take two weeks off, everything happens!

We have legal battles, middleware announcements, AI exploitation, generative AI misfires, and more. It’s too much for me to dig into in significant detail, so for this issue I’ll summarise these stories, and we’ll return to some of them at a later date.

Havok Celebrates 25 Years with Fee Restructure

While largely known for their work in physics, Havok middleware provides a variety of high-quality AI navigation tools - it’s why they’re a perfect fit as sponsors of the AI and Games Conference 2025!

To reach 25 years as a middleware tool in the games industry is no small feat, though critically they are now owned by Microsoft. Havok is one of the biggest players in AI navigation middleware, and they were even at the Game AI Summit at GDC this year, where Richard Kogelnig highlighted their work on large-scale navigation across open-world environments - something the middleware has been put to good use for in games such as Elden Ring.

They’re celebrating 25 years with a big change to their royalty system. While the tool is still expensive for smaller studios, the move towards higher royalty thresholds and a starting fee of $50,000 shows a desire to move away from their existing economic structure. It will be interesting to see whether they move towards supporting indie studios, given their tools can be a real game changer for many a company.

The UK Government and the Ongoing AI Debate

Throughout the year I’ve posted several updates on the state of the UK government and its discussions on AI integration with regulation and broader society. We’ve since had some updates.

The Data Use and Access Bill (i.e. AI in the UK Law)

First, in a recent newsletter I talked about efforts in the Data Use and Access Bill to enforce copyright protections against tech companies seeking to scrape assets. As discussed in a brief follow-up, all of the motions towards ensuring transparency of AI training and protection of copyright (i.e. the stuff we as creatives and just regular punters care about) were voted down. However, the bill had to go through one more round in the House of Lords and be accepted prior to royal assent (i.e. becoming law). It has now been rejected by the House of Lords again, bringing the critical issue of AI transparency - meaning the public’s right to know what data AI systems have been trained upon - to the forefront once more.

NOTE: It’s worth highlighting - particularly for folks outside the UK - that while the House of Commons is the chamber of the acting government, the House of Lords is meant to hold the government to account. Naturally it being politics, that doesn’t always work. But hey, it seems like something right is happening this time.

Check out the link below to part of Baroness Kidron’s closing speech discussing the matter. It’s fairly ridiculous that a Baroness is speaking more sensibly of the will of the people than the UK government itself, but hey here we are.

Keir Starmer’s Not Doing the Research

I’ve raised it in previous issues, but the good Prime Minister of the UK has been prone to drinking the AI Kool Aid of late - all the more impressive when you remember Kool Aid don’t exist in ol’Blighty.

Rosa Curling has written up a good overview of the problem at Democracy for Sale, covering not just the conversation on AI, but also how US company Palantir - who have a far from rosy track record - were issued the NHS contract without tender. I wouldn’t mind being in that position, good lord. You know how long it takes to get contracts signed off when you’re working for government and similar organisations? Months I tell you, months!

As I mentioned in my initial post on the AI issues in UK law, the Prime Minister appears to be under the belief that giving all of the big tech companies what they want - carte blanche to train on and exploit UK assets for their broader gains - will result in greater prosperity for all, like some technology-driven trickle-down economics.

Y’know, that economic model that has never actually worked in practice?

Meanwhile, the BFI Have Done the Reading

Right as I was on the train over to London Tech Week on Monday, I was reading through the BFI’s report on AI in the broader creative industries, and their suggestions for how to move forward, entitled AI in the Screen Sector: Perspectives and Paths Forward.

This is arguably the most significant and high-profile analysis of AI in the UK’s creative industries to have come from any major trade body or representative organisation, and for the most part it’s pretty decent. My views don’t align with the report 100%, but it is certainly a step in the right direction.

However, the weirdest part for sure is spotting that my video and article on how AI is used in the games industry (2024 edition) is referenced in the report.

Hey BFI, I have opinions on AI in the creative industries, you’re going to hear a lot more of them in the coming weeks. Let’s chat shall we?

Nick Clegg’s Back Again

As the House of Lords pushes back against underdelivering legislation, Meta’s former head of public affairs, and former liberal politician (ha!), Nick Clegg has re-emerged to discuss the current state of AI in the UK.

In a piece in The Times he argued, as many other tech giants have in recent years, that it’s simply impossible for AI to operate without legal concessions being provided to it. Put simply, if copyright law doesn’t bend to serve big tech, then AI as an industry simply cannot exist.

It’s worth clarifying how misleading this statement and his broader assessments of the sector are. What Clegg is really saying is that the AI tools created by the likes of Meta, Microsoft and OpenAI are neither attainable nor sustainable without the theft of copyrighted materials. Even if they licensed the material from copyright holders, right now there isn’t a big enough ‘industry’ for these companies to turn it into a profit.

That isn’t to say (generative) AI cannot succeed. These models can be curated at much smaller scale, and in a more cost effective manner - particularly if you own a corpus of existing data to build from. But hey, don’t let the facts get in the way of a poorly constructed argument.

Stability AI vs Getty Images - Live from the High Court

Last thing coming out of the UK is that this week a court case is kicking off between Stability AI - creators of image generator Stable Diffusion - and Getty Images, the stock media company. The latter is accusing the former of exploiting their image library without consent or compensation for use in training their image generators.

What does this have to do with games? Right now, nothing. But these are the cases we need to pay close attention to, given they’re prone to establishing legal precedents for how these things play out in the future. Plus, this is a legal case running here in the UK (because Stability AI is a UK business), unlike most others, which are playing out in the US courts. It’ll be interesting to see in the long run whether there is alignment, or not, on this topic on both sides of the Atlantic.

I wonder what Nick Clegg thinks about this one…

Hold Their Lite Beer…

Don’t worry, if you thought this news segment was too UK-focussed, the US has had plenty of things going on that are worthy of the spotlight.

Legislation on State AI Regulation

After hearing it bounced around for a few weeks, the push by Republican legislators to ban any form of AI legislation at the state level is moving forward. This was a crafty bit of work - as is often the case in US politics - where a motion to ban individual states from legislating on AI for ten years was snuck into the 2025 budget reconciliation bill back in May. This is doubly crafty, given you can’t really strike down budget bills, making it easier to get this past the first hurdle.

The long and short of this is that the Trump administration and Big Tech have been schmoozing a lot of late, and it would be convenient if the acting powers could lay out a series of regulations ensuring that the law continues to work in their favour as they seek to build and maintain their position as the world leaders in AI. So the proposal is quite simple: the US federal government would prevent any individual state from drafting, finalising or enforcing legislation on AI in any capacity whatsoever. To quote the bill:

“no State or political subdivision thereof may enforce any law or regulation regulating artificial intelligence models, artificial intelligence systems, or automated decision systems during the 10-year period beginning on the date of the enactment of this Act.”

That is, as the kids say, rather undemocratic.

It doesn’t take much effort to start putting together a narrative of why this is occurring. After all, virtually all of the Big Tech companies - the likes of Apple, Google, OpenAI, Meta, Tesla - are based in the Democratic state of California, which is currently putting forward numerous pieces of legislation against the very companies it incubates. In reality, it doesn’t seem like this would hold up in the long run. After all, federal legislation that prevents states from acting, without due cause, for a period spanning multiple administrations is the textbook definition of ‘overreach’. But it may take time for it all to be fought out in the courts.

You can read a great breakdown on this over on Blood in the Machine at the link below.

Ex-US Copyright Office Director Sues The Trump Administration

Let’s end our US stories on a high note. A couple of weeks back I highlighted that the US Copyright Office had come out in favour of creatives in the pre-publication version of its report on AI, only for its director, Shira Perlmutter, to then be fired by Trump within 24 hours.

Well, as has increasingly become the way of things of late, a lawsuit has been filed against the Trump administration stating that her dismissal can only be made by the US Congress. On yersel’ Shira.

GMTK’s Voice is Cloned by AI - Amusing Nobody

As someone who is a voice on the internet, I’ve already had companies pitch the ability to clone my voice without my permission as a good thing - and I have threatened legal action against two of them. But these were businesses hoping to make a few bucks, not individuals hoping to… actually I still don’t really get what the point of this was.

Mark Brown, the author of the ever-popular Game Maker's Toolkit on Substack and YouTube, sadly had to contend with a random person on the internet training an AI voice to mimic Mark, and published their own YouTube video with this fake voice narrating it.

Fortunately, after a couple of days the content was taken down, but it again speaks to the fundamental issue that people can go ahead and clone your voice for their own personal interests. This is exactly what SAG-AFTRA have been striking over (more on that in a second): right now games companies hope to utilise these assets without needing to have their use approved by the original performer - or to compensate them for it.

Not to mention this is getting into the realms of public defamation, as an individual’s voice is used to make them say something they otherwise might not. I know full well how important it is to protect this aspect of my work (after all, I talk a lot on the subject of AI in game development). This is undoubtedly the same for Mark, who is a trusted voice in the game development community. Disgusting.

Learn a Lot and Get Angry About Generative AI

This cropped up on my feed and I had such a good laugh I figured it was worth sharing. Game developer Sos Sosowski, who is an eclectic individual at the best of times, presented a talk entitled “FUCK AI” at AMAZE over in Berlin.

Now I don’t think we could accuse Sos of burying the lede. However, what this talk does is give an accessible on-ramp to understanding generative AI technologies, and why the current trends within them are largely driven by systems that are not guaranteed to be accurate and not sustainable without theft (oh boy, a recurring point in the news today). I had a fun exchange with Sos afterwards on BlueSky, and it’s clear we have shared views on a lot of this. It’s just rare that the Scottish guy is the more subdued one!

SAG-AFTRA Reach Tentative Agreement with Games Companies

After the best part of a year of strikes, SAG-AFTRA announced late on Monday (June 9th) that they’ve reached a tentative agreement with games companies, and are moving to a review stage with their board that could lead to it being ratified.

To quote SAG-AFTRA president Fran Drescher (yes, from The Nanny):

“Our video game performers stood strong against the biggest employers in one of the world’s most lucrative industries. Their incredible courage and persistence, combined with the tireless work of our negotiating committee, has at last secured a deal. The needle has been moved forward and we are much better off than before. As soon as this is ratified we roll up our sleeves and begin to plan the next negotiation. Every contract is a work in progress and progress is the name of the game.”

As a reminder, SAG-AFTRA have been striking against video game studios since July 2024, and it was all down to one critical element. 24 of 25 conditions were agreed, but the last one was on the adoption of AI, and the union made the right call to stand against the employers’ demands. My summary of it all is right here.

This is a rather significant step, and fingers crossed it results in a positive outcome.

Microsoft Acknowledges Israel Connections Following Boycott

It was mentioned back in April that a boycott had been raised against Microsoft, and more specifically Xbox, by the Palestinian BDS National Committee (BNC). This is due to the sale of Microsoft technologies to the Israeli military as part of their broader campaign of genocide in Palestine.

Now Microsoft has come forward and confirmed that yes, they have indeed sold their technology - and critically their AI tools - to the Israeli military. However, they insist it is for broader military operations, and not to "target or harm people in the conflict in Gaza." An interesting distinction to draw.

EA and Take Two Acknowledge ‘Reputational Risks’ of Generative AI

For the past year, I’ve argued that there is significant risk in continuing to participate in the AI conversation with speculative and non-committal engagements. Far too often I hear businesses advocate for (generative) AI, without saying or presenting anything of value. Heck, I spent Monday this week at London Tech Week and the term ‘AI’ was being thrown around with reckless abandon. I don’t think anyone on stage talking about it had a clue what any of it meant.

As reported by Jason Schreier on Bloomberg, EA and Take-Two are now warning shareholders of the potential reputational risks of AI as part of their broader strategy. For one thing, it’s worth noting that this is common: boards need to identify to shareholders and potential investors what risks exist in parts of their work and broader strategy. However, it does highlight that the well has been poisoned by an over-emphasis on this subject matter, only for it to repeatedly underdeliver.

I mentioned at the time that EA’s execs really dropped the ball at last year’s investor day, when their talk about ‘AI’ meant exclusively generative AI. But despite all this rhetoric, the demos and samples they showed were basic and rather lacklustre - not to mention I’m confident that they weren’t real either. This was made worse by how they actively ignored and dismissed a lot of the good work they already do in AI for game development - some of which appeared but a month later at our very own 2024 AI and Games Conference.

Businesses really need to start showing meaningful and useful applications of the technology if they don’t want to have consumers simply dismiss it as an effort to be cheap and deliver poor quality experiences.

Oh, speaking of which…

That Darth Vader Thing…

Back in January I made my predictions on how AI would roll out throughout 2025, and one of the big points I made was that someone, somewhere, was going to put their foot in it.

Of course, I said that the same week NVIDIA’s generative face-rendering tech caught much-deserved heat for the whitewashing and ‘beautifying’ of the individual it was rendering. However, it seems that we’ve now gone one step further with the release of the AI-voiced Darth Vader in Fortnite.

The long and short of it is that a Darth Vader NPC has been dropped into Fortnite, and it can hold conversations with players: Google’s Gemini LLM crafts the responses, which are then voiced by an ElevenLabs model cloning the late James Earl Jones. This was possible because Jones consented back in 2022, prior to his death, to his voice being made available for future technologies.

Of course, within mere hours, the internet was flooded with examples of Darth Vader saying things that were misogynistic, racist, or simply beyond the realm of what the Dark Lord of the Sith should be saying. While Darth Vader is everyone’s brand-friendly space-fascist, I’m beginning to think the version in Fortnite doesn’t quite align with the branding guidelines of old.

I’m not sure what the response was that Epic, Disney, ElevenLabs, and Google were hoping for. But I can guarantee this isn’t it. They have since patched out the issues, or so they say. Despite general dismay at this, and SAG-AFTRA being enraged by it, at the State of Unreal event Epic highlighted they’re keen to double down on this and will be providing tools for chat-based NPCs in Unreal Editor for Fortnite - which I’m sure will go smoothly.

I have a lot to say on this, I’m coming back to it for sure!

Wrapping Up

Wooft. Like I said, a lot happened in a very short period of time while I was offline. Two weeks is apparently a long time when it coincides with your vacation huh? I’ll be revisiting many of these topics in the coming weeks both in the main newsletter and subscriber-only digests.

Thanks for reading and your continued support, and I’ll be back next week!