Game AI - the use of AI for gameplay - is facing a real test to its future.

A broader industry failure to understand the uniqueness of the field.

How the risk-averse nature of the games industry is stifling game AI growth.

Why generative AI is not the solution to game AI’s problems.

The AI and Games Newsletter brings concise and informative discussion on artificial intelligence for video games each and every week, plus a summary of all of our content released across various channels, from our YouTube videos to in-person events like the AI and Games Conference.

You can subscribe to and support AI and Games, with weekly editions appearing in your inbox. If you'd like to work with us on your own games projects, please check out our consulting services. To sponsor, please visit the dedicated sponsorship page.

Greetings one and all, and welcome to the AI and Games newsletter. We are but days away from the AI and Games Conference returning to London on November 3rd and 4th and you catch me today as I am knee deep in preparation.

For this week’s edition of the newsletter, I decided it was time to get something off my chest. With our fellow AI developers descending on London in the coming days, I have had a growing concern in my mind about where this whole ‘AI in games’ thing is going, and more specifically in game AI - the process of using AI to create non-player characters and other gameplay experiences. I have a lot of feelings on the matter, and it’s time I shared them.

While we have more recently started running issues of The Take for premium subscribers where I get to discuss my broader industry musings, this one warranted not just that I deliver it outside of the paywall, but that it be broken up into multiple parts - it wound up being a very lengthy piece. So grab a coffee and strap in, as this is one of the longest issues I’ve ever written - and it isn’t even half of what I planned to discuss.

A Word From Our Sponsor

This week’s edition of the AI and Games newsletter is brought to you courtesy of the good folks over at Shibboleth.

Shibboleth is a B2B lead generation and inbound marketing agency exclusively for games brands. From game art studios to game development outsourcers to game dev tech & middleware, Shibboleth helps innovative games brands to generate more business and skyrocket their growth.

You can get a free consultation today by heading over to: https://weareshibboleth.com


Announcements

This week’s announcement segment is largely about the conference, but also one or two other things.

Catch The Latest Episode of Artifacts

It’s been a hot minute since our YouTube series Artifacts - which focussed on procedural content generation - has received any love. There is a healthy backlog of topics to cover, but I am glad to say we got a brand new episode out last week looking at the typing survival horror game ‘Blood Typers’: a game where players need to solve puzzles and survive against hordes of zombies and other monstrosities, all entirely by typing words on the keyboard.

We get into the weeds on how a game originally prototyped for the Game Maker's Toolkit on Substack Game Jam became a fully fledged game, how building levels works - with dev footage throughout - plus an insight into why they let the system make ‘broken’ levels, and then fix them during generation, rather than prevent that from happening.

A big thank you to the devs at Outer Brain Studios, Alex and Dmitry, who gave up their time (right before pushing a huge update to Steam) to talk about the game. We’ll have a written version on the website in the next week or so, but as you can imagine things are pretty hectic around here right now and I haven’t had a chance to tidy it up. But if you enjoy videos, then go click on this one!

Our 2025 Halloween Stream is Condemned!

Tune in on Thursday October 30th at 7pm GMT (i.e. tomorrow night) for this year’s Halloween livestream - where I play a spooky game that the audience votes for. The votes came in from our YouTube audience and the decision was made to have me play Condemned: Criminal Origins from 2005.

Going in cold, I have no clue what it’s going to be like, and I’m looking forward to it.

AI and Games Conference 2025

With less than a week to go, we still have a few tickets left on sale for our big event. It’s a lesson learned from last year where we sold out two weeks in advance due to hitting capacity. So this year we have a setup that should guarantee everyone can attend if they want to.

Be sure to head over to www.aiandgamesconference.com to learn more about the programme, with two days of talks and panels from the leading minds in AI for game development. A quick reminder of what we have planned:

Presentations from leading industry players such as Ubisoft, Electronic Arts, CD Projekt RED, Warhorse Studios, Sports Interactive, Avalanche Studios.

Emerging players in the generative AI space such as BitPart.ai and Meaning Machine.

Exciting new research from Queen Mary University of London, University of Windsor, and Wentworth Institute of Technology.

Discussions of the legal implications of AI for games from Lewis Silkin LLP, and a panel on games investment from Curran Games Agency.

All this and presentations from our sponsors: AWS for Games, Creative Assembly, Havok, Riot Games, Arm, Databricks and Raw Power Labs.

Monday Night Reception

We look forward to welcoming our delegates to a reception at New Cross House in London just around the corner from our hosts Goldsmiths, University of London. This will be running from 6pm-10pm, and badges are required for entry. So if you’re only arriving into London on Monday, be sure to swing by the venue first to grab your pass before the evening’s entertainment kicks off!

New Merchandise for 2025

Last year we gave away free pins of our company mascot to all of our attendees, while also selling out the allotment of extra t-shirts for attendees to buy. It’s been awesome to attend other events this year and bump into developers wearing our stuff. It’s super cool.

We’ll have a new pin to give away for all attendees, but don’t worry, you can grab last year’s, plus a bonus one at our merch stand. Alongside that will be a new 2025 conference t-shirt and an exclusive 2025 coffee mug too!

But now, it’s time to reveal a very special secret that we’ve been sitting on for the past six months, that our premium subscribers only became aware of a couple of weeks back. As AI and Games received a big rebrand in early 2023, I realised how emotionally attached I’d become to our little logo mascot. To the point that when I had graphic designer Andy Carolan refresh the logo, I didn’t just double down on keeping the wee monster (*ahem* space invader) design, I finally gave it a name: Crit.

Crit has been with us since the very first logo for AI and Games, and has received not just a glow-up, but a more polished and business-savvy white version (also by Carolan) that we use for the conference logo. Then on top of this, I asked my friend Helen O’Dell, a games concept artist, to draw up some cartoon versions of Crit which are used for everything from Discord emojis to promoting AI and Games on various corners of the internet.

And so after many emails behind the scenes, we took the cartoon version of Crit, and yeah… we made a plushie!

This is largely the handiwork (read: mischievous dealings) of Matthias and Sally. The latter of the two somehow always having ‘a guy’ who can execute on whatever crazy ideas we cook up. I love it. I’ve had the prototype sitting on my desk for the past six months and I knew as soon as I laid eyes on it, that I wanted us to offer them up to our community! We subsequently had a limited run ordered and shipped slowly but surely by sea freight from our manufacturers in China.

You can grab your very own Crit plushie next week at the AI and Games Conference where you can see them in all their magnificent glory, and take one home with you.

If you can’t make it to London next week, but would love to get your hands on one, we have a limited number that will be available for sale online in early 2026 to be shipped out across the world.

Sign up to our waitlist to be the first to know when Crit is available to pre-order online!

So freakin’ cute!!!

Game AI’s Existential Crisis: Part 1 - The Challenges Faced in Modern Game AI

Game AI, the practice of implementing artificial intelligence to craft the experience of players in video games, is facing an existential crisis.

A crisis of confidence in technology.

A crisis that, at its heart, is about the perils of scale and complexity.

A crisis of handling risk in a land of the risk-averse.

A crisis of lost knowledge, and of forgotten values.

A crisis born of a niche that exists for a single purpose, in a world that seldom acknowledges its existence.

The process and study of how we use artificial intelligence in games, as a means to craft fun, engaging, and memorable experiences, faces a threat to its survival. We’re at an interesting inflection point in the games industry as a whole, and while game AI is sharing in many of these challenges with other disciplines, there are many problems faced by this corner of game development that are unique to it.

This is Not a Rant About Generative AI, but it is one of the factors that has led to my writing this piece. Rather this is a sentiment that has been brewing in my mind for the past couple of years, and I figured it was time I put it all down in writing, and gave it a voice. By making my thoughts on this a little clearer, I want to drive a discussion in our community over how we address it.

This Thesis is a Reflection of the Fragility of the Video Games Industry. I think a lot about how the games industry is this big, expansive, and powerful sector, but it is equally one that is often fraught with challenges, and relies on inconsistent and unreliable strategies in how it funds projects, how it nurtures talent, and how it builds technology.

For me, as someone who has spent well over a decade researching, building, and communicating the intricacies of AI for the games industry, I think Game AI currently has some very specific challenges:

Addressing how risk consumes our capability for experimentation.

Assessing how we design to systems, rather than design for purpose.

Considering how knowledge is exchanged, paywalled, or lost.

Discussing how our communities, while wonderful, are fraught and threatened by the world they exist within, and the voices louder than them.

This is a Challenge We Can Address Together: We will figure this out, that of which I am certain. I meet many people across the sector who share in the values that we have both here at AI and Games, alongside my friends who run Game AI Events CIC with me - organisers of the AI and Games Conference.

We have the capacity to make this happen, but in order for us to combat the issue, it needs to be brought into the light.

A Vision of the Future

Back in 2024 it was the 10 year anniversary of the AI and Games YouTube channel, and I made some videos to celebrate the occasion. First I reflected on how things had changed in the decade that had passed: both in the field but also for me personally and professionally - as I had somehow built my own little enterprise off the back of a modestly successful YouTube channel. Then I made a second video sharing stories of how some of our YouTube episodes were made, and how some videos led to my working on other projects with various businesses and organisations.

The plan was always to make one final video. Having looked ten years into the past, I wanted to look ten years into the future. A future for AI in game development where we explore this particular niche of the sector in new and exciting ways that empower developers, and in-turn bring new and fun experiences to players.

But every time I tried to put it to (virtual) paper, it rang hollow.

I attempted to write it on three separate occasions in the space of around nine months, and every time it felt forced, bordering on naïve. But perhaps the most concerning aspect was that the more I thought about it, the less I liked where my thoughts led me.

It’s sat in the back of my head for the past year or so, and it was only in the spring of this year, upon returning from GDC, that a single statement took shape in my mind:

“Game AI faces an existential crisis.”

Since then I’ve shared my concerns with peers in the industry, and that’s when it began to take shape, as I laid out my argument and people nodded in agreement. What has really helped put the icing on the cake has been my more public efforts to engage in the dialogue on where AI is headed in the sector, be it with this newsletter, my public speaking, or my friends and I founding a non-profit organisation to run our conference.

I’m going to walk through this thesis in three specific areas:

First, discussing the challenges of Game AI itself as a methodology in game development.

Secondly, how education is a real challenge both within the community and for new generations of developers.

Lastly, the issues we face in bringing that community together as the world continues to spin.

For this first part, we will start by setting the scene for anyone not familiar with game AI as a concept, and then dig into the challenges that the field faces. Then in future parts, we dig into the challenges surrounding education and how our community is going through upheaval.

Finally, we will summarise a lot of our recommendations, and critically what we across both AI and Games Ltd (who run this newsletter, our YouTube channel, and conduct consultancy and professional training) and Game AI Events CIC (who run our conference) are doing to try and address them.

Game AI: Explained

Okay, so before I get farther in, you may be new to me, my work and all things AI and Games, so here’s a quick primer on what Game AI is, and why I’m talking about it. If you’re already familiar with game AI - or are a regular follower of my work - odds are you can go ahead and skip this segment!

Game AI is the practice of using artificial intelligence to facilitate part of the experience of playing a video game. This can manifest in a game in a variety of different ways:

Non-player characters who appear as opponents, enemies, or allies in a game. This can range from:

Cannon fodder in shooters like Halo, Call of Duty, and Tom Clancy’s The Division.

Antagonistic enemies in horror games like Resident Evil: Village, Amnesia: The Bunker or Alien: Isolation.

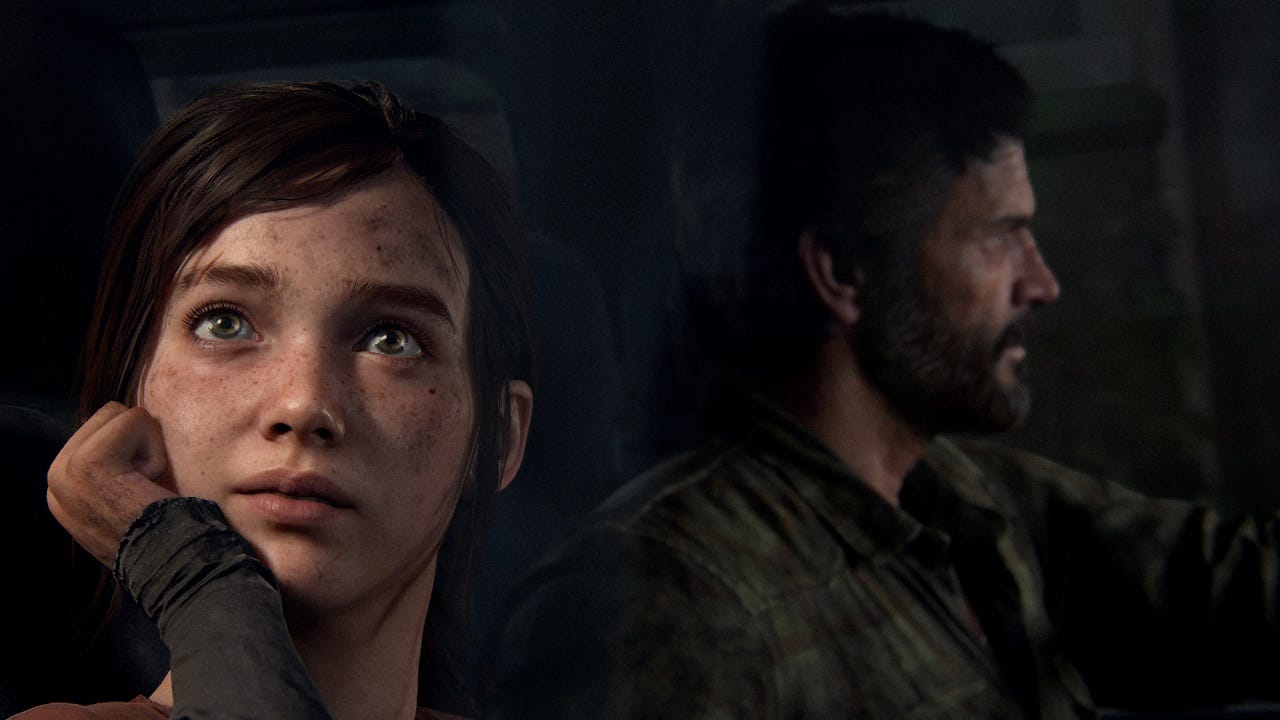

Companions as seen in games like The Last of Us, Half-Life, or BioShock: Infinite

We use it for navigating complex environments in games:

Not just for combat NPCs, but incidental characters in Cities: Skylines, plus the likes of vehicles and other relevant game objects.

We build custom solutions that handle complex open-worlds in games like Elden Ring and Sea of Thieves.

It is used for strategic opponents in games.

Real-Time Strategy games like Total War or StarCraft run game AI systems to make decisions on what individual units do over time.

Similarly this can be seen in tactics games like X-COM, Gears: Tactics, and Into the Breach.

Even deck builders like Hearthstone or Marvel Snap have game AI systems that act as bot players, typically to support novices getting into the game.

But also gameplay systems that support the experience in ways players seldom think about.

Director systems in the likes of Left 4 Dead help ensure gameplay remains tight, consistent and engaging.

Combat management systems in the likes of the Batman: Arkham or Spider-Man games help ensure combat feels fresh but not overwhelming.

Open-world games like Far Cry and Assassin’s Creed manage the spawning of NPCs such that they appear in interesting situations, and don’t waste compute resource.

Plus, there’s an argument to be made - and I will make it - that procedural content generation falls under this remit.

A remit of algorithmic decision making governed by designer logic in an effort to create content (levels, characters, quests etc.)

To do all of these things, we rely on a variety of tools, techniques, and methodologies to make it happen: Finite State Machines, Behaviour Trees, Automated Planning, Navigation Meshes and algorithms, Utility systems, Belief/Desire/Intention (BDI) models, and many more. I’ll forego explaining what each of these do - after all I’ve documented this at great length over the past decade or so on YouTube and on our website - but the important part here is that virtually all of it relies on technologies that are increasingly no longer in vogue.

Machine Learning: A Primer

Writing this piece now in 2025, when we talk about artificial intelligence, we are most commonly talking about Machine Learning (ML). Many of the contemporary applications of AI, be it in drug discovery, social media analytics and recommendations, or more recently the boom in generative AI models such as GPT and Midjourney, are built using Machine Learning.

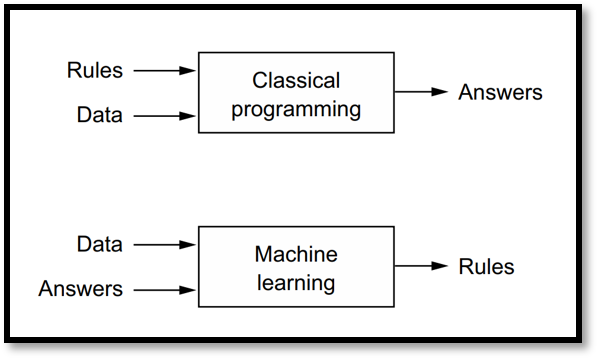

Machine learning is a process of training an AI model based on data we have about a problem, such that it learns the intricacies of that problem so it can solve new problems just like it. So you train a model for image recognition, such that it can then identify future images. Similarly, generative AI processes large numbers of examples of text or images, such that it can then produce new text, or new images.

To help distil this a little further, I often like to turn to this explanation from François Chollet in his book ‘Deep Learning with Python’, in which he highlights that machine learning differs from ‘classical programming’ if you observe it as a black box. In classical programming we provide the rules of a problem and the data that reflects our current scenario. We then program a system to find answers. So if you take for example a game of Chess: we feed the algorithm the rules of the game plus rules on how it should play (ideally with some degree of skill), and the current state of the board and we will typically search for an answer that gets us closer to winning the game.

Machine learning is different, in that we give it examples of playthroughs of the game such that it can learn the rules. Ideally it learns them well enough that we can give it new and often more complex versions of the problem, and it just ‘knows’ what to do, given it has learned all the intricacies of the domain. This is often very powerful, because it is very hard for humans to formulate every rule we could need for every scenario. Equally, we humans have rarely had the same level of experience as the ML algorithm, given it will often learn by simulating playthroughs millions of times to test whether the rules it has established are correct.
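To make the contrast a little more tangible, here is a toy sketch in Python. It is not taken from Chollet's book or from any shipped game: the "should this NPC flee?" problem, the function names, and the example data are all hypothetical. The first function is the classical approach, where a human writes the rule. The second fits a single threshold from labelled examples, which is about the simplest form of learning a rule from data (a one-parameter decision stump), and is nowhere near the scale of a real ML system.

```python
def classical_should_flee(health: float) -> bool:
    """Classical programming: a human authors the rule directly."""
    return health < 0.3  # hypothetical, designer-chosen threshold


def learn_flee_threshold(examples):
    """Toy 'learning': pick the health threshold that best explains the examples.

    `examples` is a list of (health, fled) pairs, e.g. recorded from playthroughs.
    The learned rule is "flee when health <= threshold".
    """
    best_threshold, fewest_errors = None, float("inf")
    for threshold in sorted(health for health, _ in examples):
        # Count how many examples this candidate rule gets wrong.
        errors = sum((health <= threshold) != fled for health, fled in examples)
        if errors < fewest_errors:
            best_threshold, fewest_errors = threshold, errors
    return best_threshold


# Hypothetical observations: (health, did the character flee?)
observations = [(0.1, True), (0.2, True), (0.25, True), (0.5, False), (0.8, False)]
learned = learn_flee_threshold(observations)


def learned_should_flee(health: float) -> bool:
    """The rule recovered from data rather than written by hand."""
    return health <= learned
```

In the first case the rule goes in and answers come out; in the second, the answers go in and a rule comes out.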

Game AI is Often Powered by Symbolic AI

Game AI - for the most part - does not rely on machine learning. Rather, it is powered by symbolic AI (or even just ‘Artificial Intelligence’): a field of computing that relies on representing knowledge about problems using high-level symbols and logic. We humans define the logic to express the rules of the system, and then our game AI systems make decisions either by applying specific rules, or by searching within the defined logic to find an answer to their problems.

In the context of games, that means we often express the logic for a character in a game that makes sense for us both as developers and designers to interpret it, while also making it computationally tractable for a computer to process it. So for example, if you’re playing a first person shooter, we’re paying attention to the distance of enemy NPCs to the player, whether they can see the player, how much resource they have or whether they are near other relevant resources (weapons, vehicles etc.). It may have a goal it is trying to execute (ranging from killing the player to finding a resource, or moving to cover) and we find the appropriate actions to take in that moment such that it can achieve that goal - maybe not now, but in a future point in time.
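As a rough illustration of what that looks like in practice, here is a minimal Python sketch of a rule-based decision for a shooter NPC. Everything in it is an assumption made for the example: the `NPCState` fields, the thresholds, and the action names are hypothetical and not drawn from any particular game or engine. The point is only that the logic is written in terms a designer can read and tune.

```python
from dataclasses import dataclass


@dataclass
class NPCState:
    """Hypothetical snapshot of what the NPC knows this frame."""
    distance_to_player: float  # metres
    can_see_player: bool
    ammo: int
    health: float              # 0.0 (dead) to 1.0 (full)
    near_cover: bool


def choose_action(state: NPCState) -> str:
    """Return the first action whose designer-authored rule matches.

    Rules are checked in priority order, so survival beats aggression.
    """
    if state.health < 0.3 and state.near_cover:
        return "move_to_cover"
    if state.ammo == 0:
        return "find_ammo"
    if state.can_see_player and state.distance_to_player <= 20.0:
        return "attack"
    if state.can_see_player:
        return "advance"
    return "patrol"
```

For example, `choose_action(NPCState(10.0, True, 5, 0.9, False))` returns `"attack"`, while the same NPC with no ammo would go looking for some. Real systems such as behaviour trees or planners are far richer, but they share this property of human-authored, inspectable logic.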

Symbolic AI is often given the moniker of ‘good old-fashioned AI’, because when AI became an applied science in the 1950’s, it was the first thing that we realised actually worked. Machine learning was conceived right around that same time, but one could argue it didn’t really hit its stride and prove successful until the deep learning boom of the early 2010’s. But in the meantime, we went through generations of successes with symbolic AI, as it proved vital in areas such as robotics and autonomous vehicles, power-station management, business logistics and of course, in video game development.

This is not to say machine learning does not bring any value to game development. Far from it. As detailed in our breakdown of how AI is used in the games industry, we have found a variety of ways in which to use machine learning, but most of it isn’t in game AI. Systems such as Gran Turismo 7’s Sophy or Forza’s Drivatar have shown that using machine learning can bring a lot of value to players. These are two great examples of my earlier point that distilling the logic or rules of fast-paced race car driving is often harder for a human than training an ML model to learn it for us.

Meanwhile we have learned to embrace ML in a variety of areas of video game development and production, ranging from matchmaking algorithms in Dota 2 and Marvel Rivals, to cheat detection in Call of Duty or PlayerUnknown’s Battlegrounds (PUBG), to gameplay testing in Candy Crush Saga. Plus while in 2025 the conversation over generative AI’s value to the industry is ongoing, we’ve used it already in the likes of texture upscaling for games like Mass Effect: Legendary Edition and God of War: Ragnarok and of course in super-sampling technologies such as Nvidia’s DLSS and AMD’s FSR.

Game AI is Seldom About Optimality

Perhaps one of the biggest things most people fail to grasp about game AI, is it’s one of the few corners of artificial intelligence where the emphasis is not on achieving a level of accuracy or optimality. If we think about everything from logistics to image recognition, regardless of the technique our ambition is for the AI to make the best decisions possible.

But for game AI what is the ‘best’ decision is often highly subjective.

We want NPCs and other AI opponents to make interesting decisions, but interesting is not necessarily optimal. An enemy in a game should be taking actions that lead to fun gameplay moments, and often that means making them fallible, and leaving room for error. This is one of many reasons why symbolic AI is best suited to these problems, given designers can express what they want characters to do in specific moments that give you an opportunity - be it to defeat them, avoid them, or complete other in-game objectives.

Even aspects of game AI where (sub)optimality is still important, such as navigation, still need to feel appropriately designed within the confines of the game world. Hence we have NPCs avoid running through water, or stumbling across rocky terrain, because while it might be the most optimal route in terms of distance, it feels strategically or performatively out of place.
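One common way to get this effect is to weight the search by terrain type, so the "shortest" path is the cheapest rather than the most direct. The sketch below is a minimal illustration using Dijkstra's algorithm on a small character grid. The tile symbols and cost values are hypothetical, and a production game would more likely run A* over a navigation mesh with per-area costs.

```python
import heapq

# Hypothetical designer-tuned costs: open ground, water, rocky terrain.
TERRAIN_COST = {".": 1.0, "~": 5.0, "^": 3.0}


def find_path(grid, start, goal, terrain_cost=TERRAIN_COST):
    """Cheapest 4-connected path from start to goal, or None if unreachable.

    `grid` is a list of equal-length strings; cells are (row, col) tuples.
    Tiles missing from `terrain_cost` are treated as impassable.
    """
    rows, cols = len(grid), len(grid[0])
    frontier = [(0.0, start)]
    best = {start: 0.0}
    came_from = {}
    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            break
        if cost > best[cell]:
            continue  # stale queue entry, a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            tile = grid[nr][nc]
            if tile not in terrain_cost:
                continue
            new_cost = cost + terrain_cost[tile]
            if new_cost < best.get((nr, nc), float("inf")):
                best[(nr, nc)] = new_cost
                came_from[(nr, nc)] = cell
                heapq.heappush(frontier, (new_cost, (nr, nc)))
    if goal not in best:
        return None
    path = [goal]
    while path[-1] != start:
        path.append(came_from[path[-1]])
    path.reverse()
    return path


grid = ["...",
        ".~."]
dry_route = find_path(grid, (1, 0), (1, 2))                            # walks around the water
direct_route = find_path(grid, (1, 0), (1, 2), {".": 1.0, "~": 1.0})   # wades straight through
```

With the default costs the NPC takes the longer route along the top row. Flatten the costs and it cuts straight through the water tile, which is the distance-optimal but out-of-place behaviour described above.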

Game AI is a Craft Rather Than a Process

A point I’ve made over the years, be it in presentations or otherwise, is that I often equate game AI to theatre. It’s about crafting an experience that makes sense within the confines of the game you are playing. That doesn’t mean it’s guaranteed to be realistic - because again, we’re interested in creating fun - a highly subjective concept - within fantastical scenarios. But it needs to make sense in the world it exists within, and reinforce the fantasy as a further axis through which the all-desired but oft-unattainable ‘immersion’ begins to take shape.

We have to think a lot about how these characters should not just exist within these fantastical worlds, but also react to the player’s actions. Expanding on the theatre analogy, the added complexity of game AI is that the player is not only the audience, but an active participant: meaning we need to not only ensure they’re seeing interesting events take place, but we then must ensure we can respond to how they then engage with them such that it feels like they exist within that space.

Naturally we don’t always get that right, but that’s half the battle.

On top of all of this, we then need to express many of these systems to players in ways that they can interpret them, and respond to those signals in kind. Hence a lot of work in game AI overlaps with animation, character art, visual effects, narrative design and sound design, as we rely on the support from other corners of the development team to express the ‘thought processes’, if you will, of game AI systems to the end user. Often this is in an exaggerated format, much like theatre, because we’re expecting players to rely on the same behaviour recognition and processing faculties that they use in everyday life, but as a means to react to an algorithmically constructed scenario.

The Game AI Problem

So to get into the meat of my thesis, I want to talk first about the immediate future of game AI, and some of the concerns that have rattled around in my head the past few years. These largely fall under four specific themes:

Game AI techniques as they stand are struggling under the weight of contemporary game design.

There is a need for ongoing experimentation in game AI methods, but game productions seldom have the time to explore new ideas.

As a result, we confine our thinking to designing against known systems, rather than design systems for purpose.

Amongst all of this, generative AI is often incorrectly held aloft as a solution, failing to understand what the real problems are, and what benefits machine learning could bring to this sector.

Now before I get into the weeds here, I want to take a moment to express unreservedly that this is neither a reflection, nor a condemnation of the hard work of so many programmers, designers, and other developers who build these systems out there in the games industry. One of the most rewarding aspects of what I do, be it to communicate game development to a broader audience, to support studios in building their own games, or heck… make my own game AI systems, is engaging with so many amazingly smart and talented individuals. Many of whom I consider colleagues and friends. Making games is difficult, and every game that makes it out there in the wild is a real victory. My argument here is more a reflection of our industry, and how we exist within it. That the situation we are in is brought more by the broader business factors of the sector than the performance of individual contributors.

In truth, what I’m talking about is risk: about the need to mitigate and manage risk. The games industry has always been interesting to me in that it’s one of the most innovative and forward-facing technology sectors. But on the software side, games have always been one of the most risk-averse of all the creative sectors.

This is largely due to games being an expensive, yet speculative proposition. Every new game is full of unknowns, of whether a particular design idea will work, whether a feature resonates with players. But even prior to that, of knowing what is the right solution to a problem, of knowing what is the best approach to take to deal with the challenges we face.

The Scale and Complexity of Modern Gaming

I often think about how the game AI techniques we use today, are the same ones that we’ve been using for 20 years. I often mention in talks that if you plot the history of game AI, the most important period is from 1995 to around 2005. During that time we had:

3D games building off of the ideas of DOOM, leading not just to id Software’s subsequent release Quake in 1996, but the likes of Goldeneye 007, Half-Life and Thief: The Dark Project. Refining aspects of character behaviour, sensory design, goal selection, and navigation.

Strategy games ranging from Command and Conquer to Shogun: Total War helped establish the need for modular systems to handle various tasks.

Early experimentation in machine learning in games like Creatures and Black & White helped establish that the technology was not ready for prime time.

Simulation games such as, well, The Sims, led to the adoption of utility and BDI systems to help manage competing interests and interactions with a complex environment.

The age of ground-breaking first-person shooters in the early 2000’s led to techniques such as Behaviour Trees courtesy of Halo 2 and Goal Oriented Action Planning thanks to F.E.A.R.

In summary, many of the Game AI techniques we still use today were largely conceived and invented during this window. With a handful solidifying their worth in later years, with the likes of HTN planning courtesy of Guerrilla’s work in Killzone and the Horizon games. And more recently State Trees in Unreal Engine are beginning to take shape as a potential replacement or companion to Behaviour Trees.

Now these systems continue to serve us well. After all many a game is shipped every year using these techniques, and we continue to find new ways to utilise them. But it’s a little concerning that the bulk of our game AI methodologies are now so old. In many respects they were built to support games from 20 years ago, rather than the games we build now. In an era where graphics pipelines, animation systems, entity component systems, and networking frameworks, have changed and evolved from one generation to the next, game AI has not truly evolved since 2005.

It leads to an interesting situation where for decades we have developers working to find ways to use old ideas to fit into this larger scope. Of building larger and more complex architectures that often merge one or more of these technologies together, all in an effort to achieve a desirable level of functionality. Meanwhile players are left wondering why the visuals of their games have evolved significantly, but the NPCs in the game world are retaining a level of intelligence exhibited in games almost 20 years ago.

It’s a question that gets levelled at me by my friends and family who are not game developers: why does it feel like nothing has changed? What about all of these recent innovations in AI - surely they could lead to some sort of evolutionary breakthrough in behaviour in the past 5-10 years?

An important point I will raise now, only for it to recur later, is that games are best perceived as a sum of their parts. Regardless of the level of visual fidelity, players complain when the writing is poor, the controls feel stiff, or in any of the 101 other ways that the game may feel lacking. With game AI you can have characters that look great, with slick animation and great modelling and texturing, but it falls apart when they act in ways incongruent with the world, or are smart to the point of being unfair. This is an evergreen concern of game AI design, but as games become more complex, the minimum barrier of quality continues to increase, and there’s no silver bullet to address these issues.

To the first question raised a moment ago, of why it feels like nothing has changed: I’d argue it’s understandable they feel this, even if the observation is incorrect. Things have changed significantly in that time, but players seldom notice it given that achieving the status quo is a challenge in and of itself.

Richer Games are Bigger Problems

If you were to compare the original Assassin’s Creed from 2007 to 2025’s Assassin’s Creed: Shadows, the scale and complexity of the game’s design has exploded - and all of that has an impact on the game AI systems, from understanding and processing the real estate of the game world, to the number of gameplay systems NPCs are expected to recognise and interact with. Trying to achieve the same base level of intelligence in Shadows is a much larger task than in the original game. The 2007 title had a much more limited scope, but equally it had far lower expectations from players - we forget that Assassin’s Creed was quite ground-breaking on its release. Not to mention that the player’s agency is far more vast in Shadows versus the original, which also has an impact on how flexible and engaging these systems are expected to be.

In fact I write this as we’re about to have not one, but two talks on the AI of Assassin’s Creed: Shadows take place at the AI and Games Conference. The first detailing the need to balance combat systems across a variety of unique NPC types, and the latter a larger conversation on the use of planning AI. These are far from solved problems, and often we need to find ways to get the old ideas to work once again as the scope of the problem increases. Every new mechanic that gives players greater agency adds to the complexity of these systems, and they’re expected to keep up the pace!

Handling NPCs at Scale

Meanwhile there is an ongoing challenge of how to handle so many NPCs at once, often in large-scale simulations that players are becoming increasingly immersed in. When game worlds can now render and process hundreds of in-game characters at once, how do we ensure that those NPCs are always doing something sensible? Or perhaps more accurately, how do we convince players the NPCs were doing something sensible both when unobserved, and when once again in the player’s view?

This is something that games ranging from Cities Skylines, to Watch Dogs: Legion have explored in various capacities, and it continues to be an evergreen problem, with presentations by Avalanche, Ubisoft, and Warhorse Studios at this year’s conference on this issue.

It’s a challenge of resource: I need to make sure NPCs are constantly making decisions that:

Make sense in context of the game and their goals.

Can be processed quickly with limited CPU budget.

Can often be processed in areas of the game not loaded into memory.

Players often fail to grasp that when you’re in a vast open world, so much of the game is not active and loaded in. As a result we often have to simulate what the rest of the game world outside of the player’s purview is doing, but do so in a cost-effective fashion so that you don’t experience performance hits in your game because the ‘world is thinking’.
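To make that trade-off a little more concrete, here is a minimal sketch of one common way to approach it: spend the expensive decision-making only on NPCs near the player, and have everyone else snap to a cheap, schedule-driven state. Everything in it (the `NPC` fields, the `full_radius` and `budget` parameters, the `full_decision` stand-in) is a hypothetical name I’ve made up for illustration, and it is not how any of the games mentioned above actually do it.

```python
import math
from dataclasses import dataclass


@dataclass
class NPC:
    name: str
    x: float
    y: float
    schedule: list  # hypothetical (start_hour, activity) pairs, sorted by hour
    activity: str = "idle"
    last_update: float = 0.0


def scheduled_activity(npc, hour):
    """Cheap lookup: the latest schedule entry at or before this hour."""
    current = npc.schedule[-1][1]  # before the first entry, wrap from yesterday
    for start, activity in npc.schedule:
        if start <= hour:
            current = activity
    return current


def full_decision(npc, hour):
    """Stand-in for an expensive behaviour tree or planner query."""
    base = scheduled_activity(npc, hour % 24)
    return base if base == "sleep" else f"{base}+react_to_player"


def tick(npcs, player_pos, now_hours, full_radius=50.0, budget=2):
    """Update NPCs, returning the names that received a full update."""
    near = []
    for npc in npcs:
        dist = math.hypot(npc.x - player_pos[0], npc.y - player_pos[1])
        if dist <= full_radius:
            near.append((dist, npc))
        else:
            # Unobserved: no per-frame thinking, just snap to the schedule.
            npc.activity = scheduled_activity(npc, now_hours % 24)
            npc.last_update = now_hours
    # Nearby NPCs compete for a fixed number of full updates, closest first.
    # Those over budget keep their previous activity until a later tick.
    near.sort(key=lambda pair: pair[0])
    updated = []
    for _, npc in near[:budget]:
        npc.activity = full_decision(npc, now_hours)
        npc.last_update = now_hours
        updated.append(npc.name)
    return updated
```

The point of the sketch is the shape rather than the detail: the cost of a tick is bounded by `budget`, not by the population of the world, and the player only ever sees the NPCs that were given the expensive treatment.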

The Perils of Scale

We exist in an age where games continue to increase in scale, be it in virtual space, or in mechanic and dynamic complexity. While game AI handles these issues well enough, it faces a number of challenges as the complexity of these systems increases. There is a need to experiment, not just to find new ways to repurpose and rework old ideas, but also to see whether the ever changing landscape of AI can lead us to new innovations that can work in lieu of, or in tandem with, these existing methods.

The Need for Experimentation

There is most certainly experimentation ongoing within the sector - we wouldn’t have people presenting about their work at events if there weren’t. I’d say over the past decade or so the industry has done a great job of finding new spins on existing ideas to help with specific design challenges. I think of Vladislav Iansevitch’s fantastic talk at our 2024 conference on Warhammer 40,000: Space Marine 2. As I detailed in the episode of AI and Games on YouTube, every innovation built for the game came from taking existing systems from other games, and reworking them to fit the design of their xeno-smashing simulator.

It speaks to how most studios experiment: by taking existing ideas, and adapting or revising them to fit their needs. This is, of course, a smart and sensible way to go about things, particularly when you have a team of developers familiar with those techniques.

I recall when I wrote about hosting/attending the AI Summit at GDC in 2024, there were more talks from studios revising spatial and navigation systems than there were on the adoption of Large Language Models (LLMs). Now of course I had a hand in that - I helped curate the talks as a member of the advisory board - but it made sense to include them because it was reflective of the conversation in our community in the moment. Games like Hunt: Showdown, Warframe, and Suicide Squad: Kill the Justice League were all revising navigation systems, or building custom ones, to service their game design. Clearly this speaks to a broader challenge, and individual efforts by studios to meet it.

This is the product of that experimentation, in that it results in us attending conferences to share our experiences, and have that conversation. It’s why game developers conferences are so valuable. I don’t say that to big up our own endeavours, but rather to reinforce their importance in the global development landscape, given - as we will discuss in a future part of this thesis - those events and our ability to share and exchange information is under threat.

When Experimentation Meets Budgets

But what if a larger or more complex effort of research is required? It’s not often a game that makes it to market is given the time or budget to allow for such creative affordances. After all, games need to ship, and I feel like game AI in particular is treated as such a known commodity that it is not given the same level of diligence and resource from a budgetary side of things for experimentation and innovation to take place - unless it aligns with investor narratives.

It’s notable that the two big games in development in recent years where NPC AI systems were at the forefront of their design were subsequently cancelled. Monolith’s Wonder Woman and Cliffhanger Games’ Black Panther titles were both exploring the same idea of establishing relationships between NPCs such that it changes the structure and experience of the game as you play it (i.e. the nemesis system).

For what it’s worth, I’ve heard from numerous sources about what those games were shaping up to be, and they sounded like really interesting experiences - in fact the stuff I heard about Wonder Woman really felt fresh in a way that truly excited me. But they were complex, and expensive propositions. They needed time to flesh out the concept and have it be cohesive, but for two large studios owned by larger corporations, that proved unviable given their desires to cut costs, and maintain a more efficient profit margin.

Naturally there are 101 other things going on that affected those productions, but you wonder what level of leeway would be given if those game AI systems were treated in the same way that investors and the C-Suite treat generative AI?

Just because these are older and established technologies doesn’t mean they just work out of the box. The strength of symbolic AI is that it relies on human designers to encapsulate the ‘thought space’ (state space) of the problems they seek to address, and then design algorithms to explore them. But this is also its inherent weakness, in that it often leads to massive explosions of complexity that need to be explored and corralled. While machine learning can and could help in this situation, it often robs us of the ability to control it as finely as is needed for game design.
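For readers who haven’t seen what ‘designers encapsulate the state space, algorithms explore it’ looks like in practice, here is a deliberately tiny planner sketch. The facts and actions are hypothetical ones I’ve invented for a generic shooter NPC, and it uses a plain breadth-first search for brevity, where a production planner would more likely use a cost-guided search. It returns both the plan and the number of states it had to visit to find it.

```python
from collections import deque

# Hypothetical actions: name -> (preconditions, facts added, facts removed).
ACTIONS = {
    "draw_weapon": (frozenset(), frozenset({"armed"}), frozenset()),
    "take_cover": (frozenset(), frozenset({"in_cover"}), frozenset()),
    "reload": (frozenset({"armed"}), frozenset({"loaded"}), frozenset()),
    "attack": (
        frozenset({"armed", "loaded"}),
        frozenset({"target_down"}),
        frozenset({"loaded"}),
    ),
}


def plan(start, goal, actions=ACTIONS):
    """Breadth-first forward search over sets of facts.

    Returns (list of action names or None, number of distinct states seen).
    """
    start = frozenset(start)
    goal = frozenset(goal)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:  # every goal fact holds in this state
            return steps, len(seen)
        for name, (pre, add, remove) in actions.items():
            if pre <= state:  # action is applicable here
                nxt = (state - remove) | add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [name]))
    return None, len(seen)
```

Calling `plan(set(), {"target_down"})` yields the three-step plan of drawing, reloading and attacking. The designer authored four actions, yet the search already has to wade through combinations involving `take_cover` that have nothing to do with the goal. Scale that up to the dozens of facts and actions in a modern game and you have the explosion of complexity I’m talking about.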

But that’s just for big experimental ideas, sometimes we don’t get enough time to just do the basics.

When Bare Minimum Meets Budgets

As I said earlier: making games is difficult. Sometimes the time spent investing in ideas and technology is what will lead to larger returns in the future. This was readily apparent last year when I sat down with Dr Jeff Orkin for a conversation about the development of the AI of F.E.A.R. Monolith’s 2005 first-person shooter has been highly celebrated these past 20 years, and I’m really proud of the retrospective we put out as a result - even if the closure of the studio this year has left a horrid taste in the mouth. Check it out if you haven’t already. But it’s funny that there was one part of that interview that caught the attention of myself and other developers.

Jeff told me Monolith spent about a year in pre-production on what would eventually become F.E.A.R., and used that time to rewrite their ‘Lithtech’ engine such that it could address the needs of their upcoming project. To that end, he spent a year largely uninterrupted focussing on writing new game AI systems, including both a navigation mesh system (which is now standard in most game engines) plus the adoption of a forward planning system that would later become Goal Oriented Action Planning.

One of the recurring conversations I’ve had with game developers over the past year who watched that video is they were blown away - jealous even - that Jeff had a year just to focus on designing new tech. The ability to spend 12 months largely without additional concerns, researching an idea that existed outside of games, and then employing it in a game engine, seems fanciful in the modern day.

As I said earlier, more often than not, it’s less about experimenting with new ideas, and more about finding new ways to integrate existing ideas into new designs. But that is only if you have even that kind of time available. Just weeks ago I spoke with some developers who worked on a well-received AAA game in 2025 who told me they simply didn’t have time to experiment. Their team was small, their resources tight, and the deadline ever looming. Just getting it done, and ensuring the AI was passably good, was all they could afford to do - and as discussed, even that minimum barrier to quality is being set ever higher courtesy of the design of the games themselves. To their credit, I think they did a great job and I’m having a great time playing [REDACTED].

And that’s coming from AAA. I often doubt I am going to see really experimental ideas for game AI coming from indie, because making great AI for games requires time, and expertise, and resource. There’s a reason most AAA studios have dedicated AI teams. You need a team of people working largely on these systems - often in conjunction with gameplay and other elements - over that multi-year development period just to get it to the level of quality they can. It’s why any time I’ve been asked to consult, or help write AI for games, it’s been with indie studios. Because these teams - while highly skilled and very competent - are far more constrained in resource, and seldom have a dedicated AI programmer. As a result a common issue that emerges is they build game AI systems that work, but are not particularly engaging. Hence I’ve consulted on several games in the past couple of years, all of them from indie teams, where their NPC AI is missing that something special, and I’ve given advice and guidance on how to address it.

But all that said, it’s indie spaces where generative AI experimentation is really shining through, given they’re moving much faster than a AAA production, but that’s a point for later.

Designing to Systems, Not Designing for Purpose

Given developers are reliant on tried and true techniques, I often wonder whether there is a risk that we avoid ideas that would prove complex for those approaches to solve.

This was a thought I’ve had bouncing around in my head to some degree. I had not quite put my finger on it exactly. However, it was affirmed to me when a former AI lead of a AAA studio, someone who has led the game AI teams during the development of games we have covered on AI and Games in the past, put it to me quite succinctly: there is a risk we over-design to fit game AI systems.

What I mean by that is we build AI to behave in ways that make sense both in terms of existing game designs, but also in how we develop them in the backend. Naturally we want to be able to transpose a game idea from scribbles on a notepad to a functional gameplay system, but the argument is we’re making that easier for ourselves because when we design game AI - we design it with the knowledge of what methodology or technique we’re going to use in the future.

This ultimately breeds conformity, because we’re stopping ourselves from thinking outside of the box of what we could do, and coming up not just with new ways to approach existing problems, but designing new approaches for potentially new problems.

I think all of these things, of a lack of experimentation, of designing to systems, speak to the realities of shipping games. It’s an expensive and time-consuming endeavour, and we’re aware that the clock is running down and the budget is running out. Hence as I stressed earlier, this is less to do with developers themselves, and more a result of the economics that surround a lot of game development. The success of the industry and the ever increasing scope of games being released breeds a sense of conformity: that we stick to known principles, perhaps even play around with them to create new ideas, but we stay within the parameters of what we have because there’s little space to do otherwise.

And so as complexity increases, and costs continue to go up, what a perfect time for a speculative technology that claims to fix it all. That with a magic bullet we’re going to make it all work out.

Generative AI Is Not The Solution

Since OpenAI released GPT-4 back in 2023 we’ve spent the past couple of years being besieged with the idea that generative AI is going to fundamentally change the video games industry.

Now this is an argument that is complex, nuanced, and in many respects presented in bad faith. I don’t have the time to focus on that in this piece. This article is long enough as it is. That topic will get its own rant, and I suspect soon.

But there are a bunch of problems to address with the generative AI boom, while also acknowledging the things that it could actually be useful for.

Stakeholders are keen to revise game AI history to suit their needs.

Understanding there’s generative AI as a technology, and generative AI as an industry.

That when used correctly, it can lead to interesting applications.

Generative AI cannot replace game AI, but it could be an asset.

The Reframing of Game AI

Far too often the narrative around game AI is conveniently rewritten by generative AI advocates. That essentially everything in NPCs is ‘just rules’ until now, with generative AI being a solution for living breathing ecosystems. In most instances what they’re really saying is ‘NPCs can have conversations now’, courtesy of large language models (LLMs). The argument is often made that what has come before ‘is not real AI’: a point used to avoid having a more nuanced conversation about the issues facing the sector, because the focus is on getting attention, and potentially investment.

But when we get into the details, I tend to see the same familiar tropes. When we hear about the future of NPC design with generative AI, it doesn’t deal with the problems that game AI handles on a daily basis…

I never hear new AI start-ups talk about handling edge cases in navigation like in Death Stranding.

I never hear how we can build new spatial awareness systems to handle open world systems for games like in Suicide Squad.

I never hear how generative AI can make for engaging search and hunt behaviours for the likes of, say, the upcoming Splinter Cell reboot.

Or how it could manage appropriate combat distances and other relevant game balancing for titles like Assassin’s Creed Shadows.

Or how we can use this technology to handle the thousands of NPCs running performantly in Kingdom Come Deliverance 2.

Instead, the loudest of AI hype merchants try to reframe game AI as the things generative AI is more likely to address successfully. It then leads to us talking about the same things over and over again…

Non-player characters that can utter realistic and emergent dialogue.

Of running emergent narrative systems that can generate infinite stories.

Of crafting NPCs that can react to human players through natural language processing.

This of course doesn’t cover the full gamut of ideas being raised - given much of the conversation is on cost cutting and changing existing paradigms - but let me stress for right now I’m focussing purely on game AI here. Now note that none of these things are topics I’ve raised thus far in this piece, and that’s because while they are a challenge, they often don’t really fall under what game AI tackles.

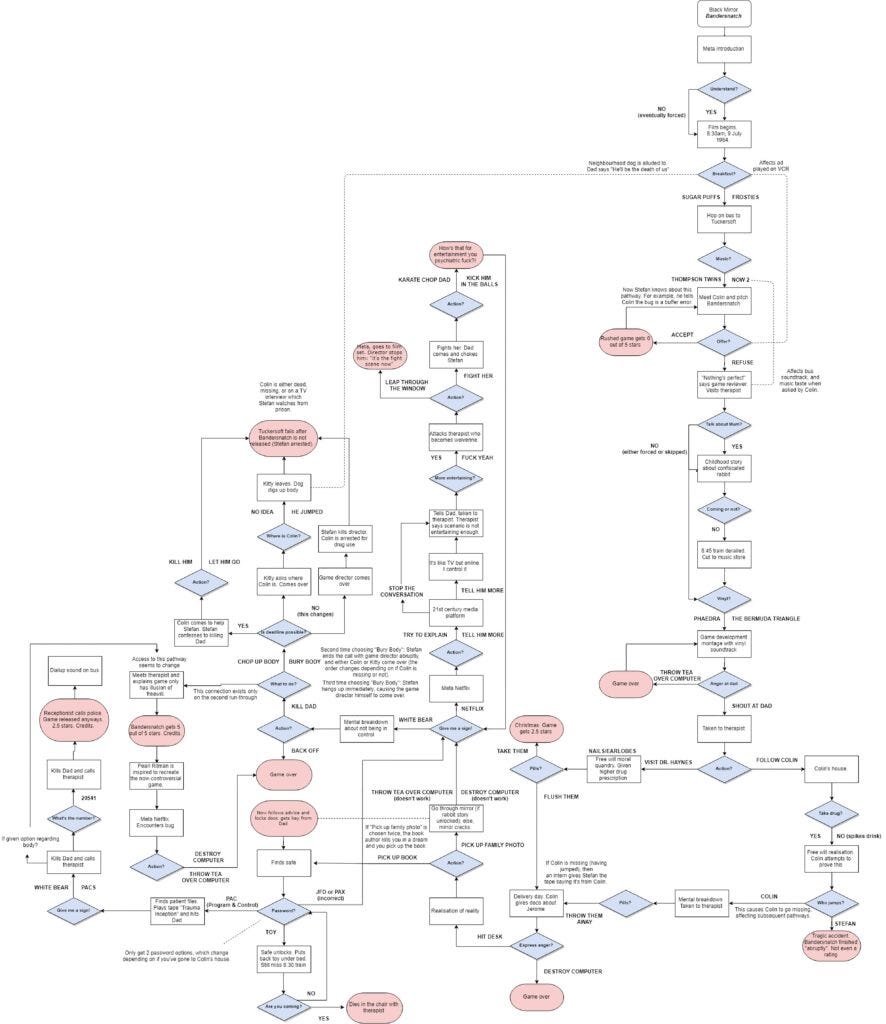

A big part of that is that the two main themes above, of story generation and natural language processing, have historically been difficult to build, impossible to scale, and challenging to implement. We have attempted to do this over the years in a variety of games, even in an era where AI techniques were not proving as effective in these spaces, with games like Versu and Façade attempting to make this a reality 20 years ago, often using symbolic AI methods like planning because ML-based natural language processing was simply nowhere near as good as it is today.

But in each of those cases, they required a significant amount of structure. Of building systems that lead to natural language interaction, and as we’ll see in a second, almost all of the generative AI systems that are actually succeeding are reliant on similar ideas.

Dare I say it, the best examples of AI-native games, where generative AI is being used for gameplay, rely on game AI to make them work. A point I shall return to in a moment.

Generative AI: The Industry vs The Technology

Now I’ve stressed this in my talks in recent months, but it is important to delineate between generative AI as a technology versus the industry trying to sell it to you.

Generative AI, as a more modern iteration of context-aware and sensitive machine learning models, is a real innovation, and as we’ll discuss in a moment it has the potential to be useful not just in game AI, but across game development as a whole. Ultimately, I do believe generative AI has value.

But…

It’s important I stress this opinion rests on two key caveats:

Generative AI works best with a specific problem, with a definable solution.

Good generative AI is the antithesis of what the AI industry is selling you.

Specific Problems / Definable Outcomes

I mentioned in my talk at Devcom back in August that two of my favourite examples of generative AI adoption in the past year have come from EA and Activision. Broader issues with those companies aside, I look at things like the AgentMerge tool for identifying redundant Jira tickets in Battlefield, and the multi-modal retrieval model for finding assets in the Call of Duty engine as great examples of generative AI being used to address very specific production process problems.

Generative AI - like all machine learning - works best when you build a specific model to address a particular problem. But the broader AI industry doesn’t want you doing that. They want you to buy their one-size-fits-all tools.

Be Wary of the AI ‘Industry’

One of the greatest innovations of generative AI is that it can handle increasingly larger and more complex amounts of training data without the models collapsing or failing to generalise. That is a real AI innovation. Sadly, it’s an innovation that is all too easy to exploit, given data can be fed at such astronomical scale it leads to the likes of GPT, Claude, Stable Diffusion, Midjourney and many other ‘mass market’ generative models that exploit the works of others without remuneration - a gambit that the internet has provided for those willing to exploit it.

But equally you can build your own generative models on a laptop provided you know the problem you’re trying to solve, and understand how to keep it tightly scoped. I mean we just reported on the ML Move project earlier this month, in which a Counter-Strike bot powered by a transformer (i.e. LLM, kinda) took 90 minutes to train and was 21MB in size. If you know the problem you’re trying to solve, you train a model for that problem.

The important thing to grasp is that the ability for mass market models to be built is what has led to a generative AI ‘industry’ that is investing hundreds of billions of dollars, largely propping up the S&P 500, for a venture that thus far has no definable path to profit. Everyone is killing flies with bazookas because that’s the narrative that’s been established. The belief is that creating these systems at this scale offers a silver bullet to the knowledge economy, a belief that is inherently flawed due to how generative models work.

When Structure Meets (Context-Aware) Chaos

The idea that generative AI can ‘fix’ game AI speaks to a fundamental misinterpretation of what machine learning is good at. As discussed earlier, when I need to find the ‘rules’ of my problems, or they’re too difficult to express, I can train an ML model. But more often than not in game AI, I know what my rules are, but I need to be able to reinforce them at scale. I don’t need an ML model to do all the heavy lifting of designing the experience, I need it to figure out some of the more context-sensitive aspects because players are capable of approaching my curated game design from 1001 directions.

Now the thing is, generative AI is really good at context-sensitive decision making: we see this through use of few-shot prompts in LLMs to solve problems in context, by processing new information in real-time. It is capable of handling real-time problems, provided they have been scoped sufficiently, and the models trained with this in mind to some degree.

But on the flip side, generative AI is terrible at building meaningful and rich outputs. This is literally where we need good writers, good artists, good designers, to create the context we need. I don’t want to simply assimilate all of that data into a trained AI model to regurgitate without nuance. I would much rather have my model guide me to it more efficiently.

If we were to focus on the idea of narrative branching and story generation (see above), what a game designer really wants is to make it easier to funnel players to curated content, rather than have to identify every possible way we could reach it, and then dictate that logic. I want my players to experience a handful of really interesting scenarios that fit within my vision, and those scenarios I will craft and design myself. I don’t want them to simply experience whatever derivative output a model can generate, given it can easily rob the player of the authorial intent of the experience - or it’s just painfully unsatisfying.

When we talk about challenges in procedural content generation - which is y’know, what generative AI does but without using machine learning - we often refer to Kate Compton’s 1000 bowls of oatmeal problem, of having each bowl be sufficiently diverse but equally trying to ensure the differences between each are interesting. With generative AI we still suffer from the same problem, except now it’s more like 1000 bowls of Proust: where every bowl I eat reminds me of a better bowl I ate long ago.

[Clearly need to come up with a better name for this concept, it sounds like I’m eating Marcel Proust, and that’s something else entirely, but I digress]

The idea of curating content and guiding towards it extends to decisions and actions: having a generative model that helps contextualise player actions such that meaningful responses, and interesting scenarios occur would be great, but I want complete control of what those scenarios are. Because a stage performance without a script isn’t of much use to me as a designer. Even the most ‘chaotic’ of simulation games like say The Sims are still inherently controlled by a logic-based system that designers have defined, refined, and ultimately dictate.

Having generative AI control behaviour without any constraint or guidance is a nightmare for an artist who has a very specific problem to solve. Conversely, building a rule-driven approach for that solution is not scaling sufficiently. So why not build a solution that bridges the two together? That’s not appetising in an investment climate, so instead what is sold is a system that is very good at guessing the solution, but has no means to validate it. It’s why using code generators or writing assistants results in creators spending hours fixing mistakes, because it has no long-term idea of what you want to do and where you’re going with it - it can only rely on that which precedes it, and the instructions given.

Behavioural systems for game AI require an internal model of the world that is reinforced. I cannot rely on an LLM generating a plan of action given there is still a statistically likely possibility it creates something implausible. Injecting chaos in a world of often total order.
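As a rough illustration of what ‘an internal model of the world that is reinforced’ might mean in code, the sketch below simulates a proposed plan against designer-authored rules before anything is allowed to execute, and falls back to an authored plan if the proposal doesn’t hold up. The rules, facts and fallback are all hypothetical, and the proposed plan is just a list of strings: I’m assuming it came from some generative model, but no specific model or API is being called here.

```python
# Hypothetical world model: action -> (facts required, facts produced).
WORLD_RULES = {
    "pick_up_key": ({"near_key"}, {"has_key"}),
    "open_door": ({"has_key"}, {"door_open"}),
    "enter_room": ({"door_open"}, {"in_room"}),
}

# Designer-authored plan to fall back on when a proposal is rejected.
FALLBACK_PLAN = ["pick_up_key", "open_door", "enter_room"]


def validate(plan, facts, rules=WORLD_RULES):
    """Simulate the plan step by step; return (ok, reason)."""
    facts = set(facts)  # copy so the caller's world state is untouched
    for step in plan:
        if step not in rules:
            return False, f"unknown action: {step}"
        required, produced = rules[step]
        missing = required - facts
        if missing:
            return False, f"{step} missing {sorted(missing)}"
        facts |= produced
    return True, "ok"


def choose_plan(proposed, facts):
    """Accept a generated plan only if the world model says it is plausible."""
    ok, _reason = validate(proposed, facts)
    return proposed if ok else FALLBACK_PLAN
```

A proposal such as `["open_door", "enter_room"]` gets rejected when the NPC has no key, and one containing an action the game doesn’t have gets rejected outright. It’s a trivial check, but notice that the part doing the checking is plain old symbolic game AI.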

Even when Machine Learning and the like is used in game AI, we have to work around it. Forza’s Drivatar has designer-crafted rules to override its behaviour in specific contexts. Meanwhile Gran Turismo Sophy relies not just on having multiple models being built to handle varying expectations of player skill, but they’re trained with designer expectations of how they should ‘behave’ during play.
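The general pattern of ‘designer rules sit on top of the learned behaviour’ can be sketched in a few lines. To be clear, this is not how Drivatar or Sophy are actually built: the observation fields, the two override rules and the stand-in `learned_policy` are all invented for the sake of the example.

```python
def learned_policy(obs):
    """Stand-in for a trained model: overtakes whenever the gap is small."""
    return "overtake" if obs.get("gap_ahead", 99.0) < 10.0 else "hold_line"


def yield_under_blue_flag(obs):
    """Hypothetical designer rule: always let the leader through."""
    return "yield" if obs.get("blue_flag") else None


def no_contact_on_first_lap(obs):
    """Hypothetical designer rule: keep the opening lap clean."""
    if obs.get("lap", 1) == 1 and obs.get("gap_ahead", 99.0) < 5.0:
        return "back_off"
    return None


# Rules are checked in priority order; the first one to fire wins.
OVERRIDES = [yield_under_blue_flag, no_contact_on_first_lap]


def decide(obs, policy=learned_policy, overrides=OVERRIDES):
    """Let designer rules veto the model, otherwise defer to the model."""
    for rule in overrides:
        forced = rule(obs)
        if forced is not None:
            return forced
    return policy(obs)
```

The model still does the bulk of the driving, but a designer can read, reorder and tweak the list of overrides without retraining anything.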

I have long felt there is an opportunity to utilise machine learning more in the context of game AI, but only when we reach a point where there is a greater understanding of what ML models do, and can more readily interface with traditional workflows. Hence we’re seeing not just academic research, but also companies like GoodAI and BitPart.ai, trying to find the middle ground. Of understanding where LLMs can be very useful in bridging the gap between having dynamic and reactive gameplay but it still being driven through traditional game AI pipelines.

We talked about this to some degree with GoodAI’s ‘AI People’ demo last year, and that’s still an ongoing body of work. Meanwhile BitPart have presented at GDC on their technology, and will also be presenting at the AI and Games Conference next week. Not to mention companies like Jam and Tea Studios and their game Retail Mage trying to figure out how this works in practice. I’m glad to see that there is some meaningful conversation happening in this space, and that others - with a lot more resource and money than I - can start exploring this in more detail.

AI Native Games Need Game AI to Work

We’ve seen the likes of InZoi by Krafton or Inworld’s Origins demo advocate for a future of AI-native games, and the use of generative AI as a means to craft entertaining gameplay. But so many of these games rely on using traditional game AI as a mechanism to achieve their goals.

In truth virtually all companies fall back on structured frameworks when they need to make any sort of LLM based interaction work, because LLMs are statistical inference engines - they lack the ability to maintain broader context, understand gameplay structure and larger authorial intent. You play things like Origins and the fantasy falls apart within two minutes because it’s clear there is no narrative design being built around these characters, nor do they feel like they exist in the world. Because LLMs can’t do that without a huge amount of handholding - and a whole stack of game AI systems to support it.

Part of this is because these demos lack the necessary polish around the rest of the product: the voice work is wooden and lacks weight, the animations need polish, the character designs lack any excitement - god so many of those demos love Metahuman huh? But it’s the failures of context that always stick out, and they come in many forms: be it in spatial awareness, of blocking principles such that they stand and engage with players correctly, but also of understanding what a player may do in that environment. You meet generative AI NPCs that have zero understanding of the world around them, making whatever conversation they drum up feel even more artificial and pointless than it already is. And these are all issues that traditional game AI has already addressed, where we sit with narrative designers, game designers, animators, and voice actors working through the expectations of how that character should exist within that space such that it feels authentic.

This also raises a problem, in that returning to those base level of player expectations, one of the most sure-fire ways to highlight the limitations of an NPC is to make it conversant courtesy of a large language model. Because players will implicitly attribute a level of intelligence to an avatar in a game world because you make it capable of having a conversation - for lack of a better term. It’s the exact same problem I mentioned earlier that modern Assassin’s Creed (and similar open world games) is contending with, but now you’ve added the complexity of pretending the NPC can have a conversation with you?

I distinctly recall playing Origins weeks in advance of public launch courtesy of the PR firm working with Inworld, and I said at the time ‘this needs another six months of work’, because the fact these are static and immobile NPCs makes the whole thing feel shoddy. Because putting an LLM into the game and getting it to speak to the player is only half of the problem.

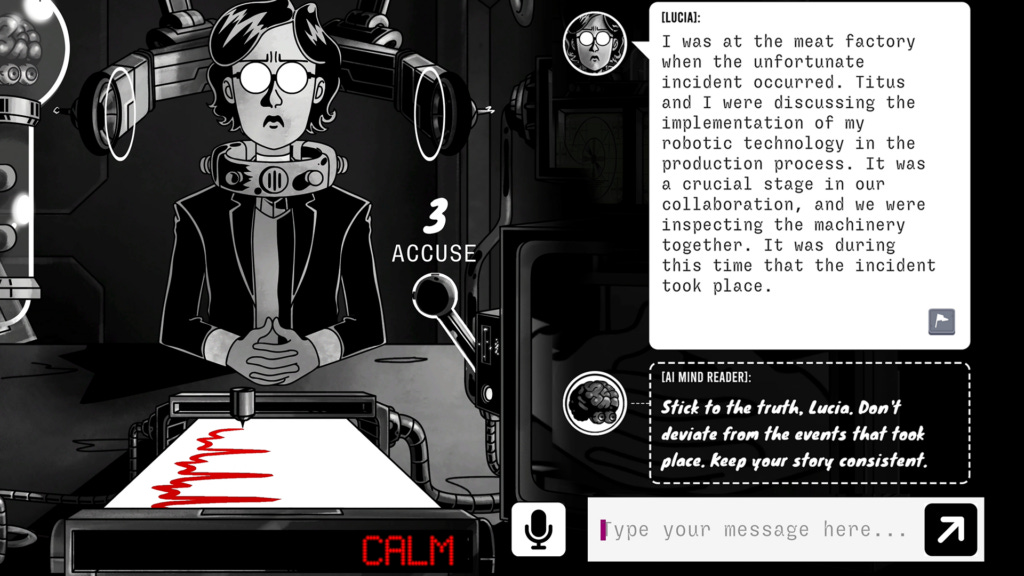

But the use of classic game AI frameworks doesn’t extend solely to the avatar of the LLM in the game world, it extends to the dialogue systems themselves. Studios such as Meaning Machine, creators of the upcoming murder mystery game Dead Meat, have gladly highlighted their need to ‘bully’ their NPCs through director-like architectures in order for that game to make sense (and are talking about it at our event next week). Now Dead Meat benefits from an interface within which the characters are in a fixed position, and the art requirements are minimal. But still, the player is engaging in a dialogue, and the LLM simply cannot retain all the relevant information about the world and current conversation, such that it retains the context necessary to ensure that experience stays consistent and engaging. In fact, we’ll have Meaning Machine join us on the newsletter very soon to discuss some of this in practice.

But returning to these NPCs in 3D environments, we’ve had the likes of Inworld or NVIDIA with their ACE platform insisting for the past two years that the future is through use of their generative technologies. But even there, when you look at the backend of these tech stacks, they have these structures - one might even call them rules - placed upon them in order for them to be practical. But that’s only for the narrative framing. More often than not they still suck as NPCs because all of the game AI considerations from 5000 words ago are still not being addressed.

The one time I thought a generative AI NPC bordered on being interesting was when Ubisoft invited me to try out their NEO project at GDC in 2024. As I discussed at the time, the devs spent months trying to fit what was an Inworld tech stack into a framework that makes sense for game AI. They built it into something that felt much more like you’re interacting with an NPC in something like Assassin’s Creed or Watch Dogs and while it was lacking - on account of being a few months of work - it was far more interesting because they’d put so much more thought into what the generative components are useful for, and where to fall back on their expertise.

Wrapping Up - For Now

I’m conscious I could write a lot more about the generative AI part of this essay, a lot more. Particularly on the advocacy and bad faith arguments raised about using the technology for game AI.

But to repeat: while I do think there is value in generative AI methods, and we will find sensible and ethical adoption over time, it’s important to close this out by stating generative AI is not the solution to the problems that game AI faces.

Rather, game AI is the solution to the problems generative AI faces.

This point needs reinforced, and communicated on repeat, and frankly until games actually start shipping with these lessons learned, the conversation isn’t really going to evolve. In a time when we rely on established convention, as budgets and timelines are tight, we need to continue to experiment and explore to figure out solutions to game AI’s challenges, but stop looking to generative AI as this silver bullet.

Now I have it on good authority, that a lot of big AAA teams have been exploring generative AI in various capacities, even in game AI corners - and for many it is simply not good enough. What you perhaps make up for in an ability to respond to different circumstances more readily, is brought down by a lack of design authorship, often poor performance and latency, never mind the costs of running these models if you’re still running them on the cloud.

In reality, the value generative AI will bring lies in working in conjunction with existing game AI systems to work around their cracks or limitations. That means having machine learning models - be they generative or otherwise - more readily respond to gameplay events, capture edge cases that are difficult for designers to express, or recognise contextual behaviours by players in ways that feel congruent and sensible. It also means building new behaviours for one or more characters to execute at once, in ways that are appropriately staged and constructed, but lifted from designer templates.

All of that sounds like a valuable thing to explore - and it’s exactly the sort of thing machine learning is suited for. But in the execution, it needs to be performant, and it needs to be easy to edit and tweak to a designer’s needs.

As this bubble continues to grow, with cash coming into this space, investment should be focussed on figuring out where game AI and ML can meet in the middle in a way that actually works for us all, rather than on this naïve insistence that the new shiny thing will replace decades of expertise in the sector.

Heck, give me the money, and I’ll get to work on it. Clearly I have an opinion or two on this issue.

The Crisis Will Continue

So this is just the first part of a much larger essay I’ve been working on, and in truth when I started writing it all down I didn’t suspect it was going to be as long as this. So I’m glad I let myself break this up somewhat.

In Part 1 I have discussed the challenges faced by game AI as a field, but for Part 2 - which will appear in your inboxes next month - the focus will be on AI education.

The impact changing trends in AI are having on higher education syllabi.

How cultural changes are dictating government policy.

How game AI education is a precarious sector held aloft by a handful of individuals with the best of intentions.

Plus the impact all of this is having both on the present and future of game AI.

Naturally, as someone who spends a lot of time educating others, and who had a 10-year career in academia, I have a lot of thoughts on this.

That’s Enough For One Week

I clearly had a lot to get off my chest this week, but that’s us for now. I need to get back to the conference prep!

Thank you for sticking with me to the very bottom of this issue. It’s been a very long piece, and I hope you found it engaging!

I look forward to seeing some of you at our conference on Monday, and for everyone else we’ll be back with another edition on Wednesday.

Well, maybe Thursday… depends how tired I am.